Designing Data Intensive Applications — Book Notes

— summary, distributed systems — 51 min read

Chapter 1 - Reliable, Scalable, and Maintainable Applications

The Internet was done so well that most people think of it as a natural resource like the Pacific Ocean, rather than something that was man-made. When was the last time a technology with a scale like that was so error-free? — Alan Kay, in interview with Dr Dobb's Journal (2012)

- Many modern apps are data-intensive as opposed to compute-intensive.

- Data-intensive apps problems:

- Amount of data

- Complexity of data

- Speed at which it's changing

- Common data systems of data-intensive apps:

- Databases — Store data for later retrieval.

- Caches — Speed up subsequent reads of an expensive operation.

- Search indexes — Search/filter data in various ways.

- Stream processing — Asynchronous inter-process messaging.

- Batch processing — Periodically crunch accumulated data.

Thinking About Data Systems

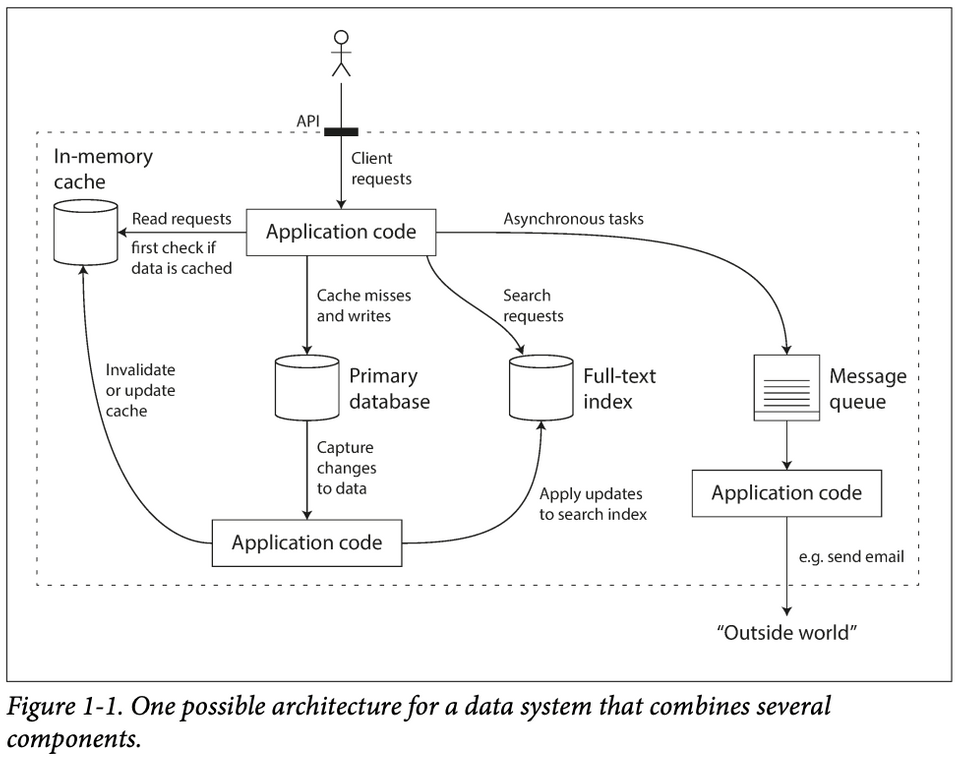

- While databases, queues, caches, etc. are traditionally considered distinct categories of tools, new tools blur the boundaries between these categories:

- Redis is a datastore also used as a message queue.

- Apache Kafka is a message queue with database-like durability guarantees.

- Modern apps have wide-ranging data storage & processing requirements that can't be satisfied by a single tool.

- Modern apps are also (composite) data systems made up of general-purpose data systems; they can offer certain guarantees & hide the foundational components behind an API.

This book focuses on three concerns that are important in most software systems:

i. Reliability

- Reliability means continuing to work correctly at the desired level of performance under the expected load even in the face of adversities like hardware & software faults; human error; etc.

- Fault is when one component of a system deviates from its spec.

- Failure is when the system as a whole stops providing the required service to the user.

PNote: Based on this definition, it's impossible to distinguish between a fault and a failure without context — as a component could also be a composite system itself.

- Systems that can anticipate and cope with faults are called fault tolerant or resilient.

- Faults can be deliberately introduced into a system to test its fault tolerance.

- It's impossible to reduce the probability of a fault to zero.

Hardware Faults

- Examples:

- Hard disk crash

- Faulty RAM

- Power blackout

- Accidental detachment of a network cable

- Hardware faults are random & independent: One machine's disk failing does not imply that another machine's disk is going to fail. There may be weak correlations, e.g: due to a common cause like the temperature in the server rack.

- Solutions: Redundancies.

- In the past, single machines sufficed for most apps as their hardware components already have redundancies.

- Multi-machine redundancies are gaining more usage as they offer additional benefits like rolling upgrades — where one node/machine can be patched at a time without downtime to the entire system.

- Hard disks are reported as having a mean time to failure (MTTF) of ~10-50 years. Thus, on a storage cluster with 10,000 disks, there should be an average of 1 failure per day.
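The "1 failure per day" figure can be checked with a quick back-of-the-envelope calculation. This is a minimal sketch, assuming disk failures are independent and spread evenly over the MTTF; the function name is illustrative.

```python
DAYS_PER_YEAR = 365


def expected_failures_per_day(num_disks: int, mttf_years: float) -> float:
    """Average number of disk failures per day across the whole cluster."""
    return num_disks / (mttf_years * DAYS_PER_YEAR)


# Evaluate both ends of the quoted 10-50 year MTTF range for 10,000 disks.
for mttf in (10, 50):
    rate = expected_failures_per_day(10_000, mttf)
    print(f"MTTF {mttf} years: about {rate:.2f} failures/day")
```

Under these assumptions the rate lands between roughly half a failure and three failures per day, so "about one per day" is an order-of-magnitude estimate.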

Software Errors

- Software faults are systematic errors within a system. They are hard to anticipate and are correlated across nodes, leading to more system failures than hardware faults.

- Software faults often lie dormant until the false assumption about their environment suddenly stops being true.

- Solutions: No quick solution. A few helpful techniques:

- Carefully thinking about the assumptions & interactions in the system

- Thorough testing

- Process isolation

- Allowing processes to crash and restart

- Measuring, monitoring & analyzing system behavior in production

Human Errors

- Humans, who are known to be unreliable, are both the builders and operators of software systems.

- A study on large internet services found that configuration errors by operators were the leading cause of outages, whereas hardware faults played a role in only 10–25% of outages.

- Solutions:

- Foolproof design

- Isolation — Sandbox environments that decouple the places where people make the most mistakes from the places where they can cause failures.

- Unit & Integration testing

- Failsafe/Reversibility

- Staged rollout

- Telemetry

ii. Scalability

Scalability is used to describe a system’s ability to cope with increased load (data volume, traffic volume, complexity).

If the system grows in a particular way, what are our options for coping with the growth?

In order to discuss scalability, we first need ways of describing load and performance quantitatively.

Describing Load

- Load parameters are numbers describing the current load on the system.

- The best choice of parameters depends on the system's architecture:

- Web server — requests per second

- Database — ratio of reads to writes

- App — concurrent users

- Cache — hit rate

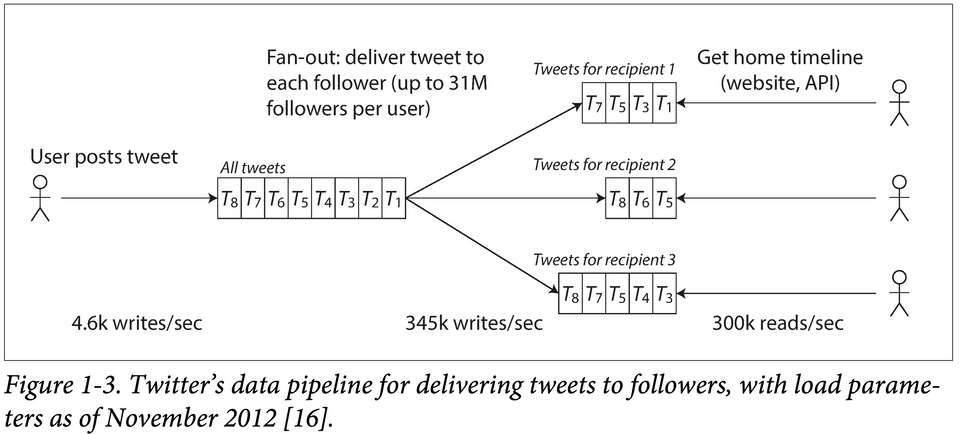

Case study — Twitter

Using data published in November 2012. Two of Twitter’s main operations are:

- Post tweet — A user can publish a new message to their followers (4.6k requests/sec on average, over 12k requests/sec at peak).

- Home timeline — A user can view tweets posted by the people they follow (300k requests/sec).

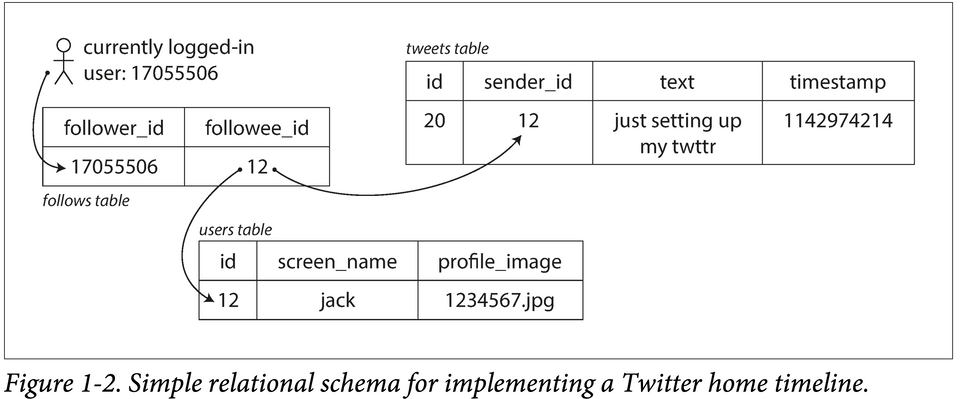

Two ways of implementing these two operations:

Approach A

- Post tweet — inserts the new tweet into a global collection of tweets.

- Home timeline — Look up all the people they follow, find all the tweets for each of those users, and merge them (sorted by time).

```sql
SELECT tweets.*, users.* FROM tweets
  JOIN users   ON tweets.sender_id    = users.id
  JOIN follows ON follows.followee_id = users.id
  WHERE follows.follower_id = current_user
```

Approach B

Maintain a cache for each user’s home timeline, like a mailbox of tweets for each recipient user.

- Post tweet — look up all the people who follow that user, and insert the new tweet into each of their home timeline caches.

- Home timeline — read from the user's home timeline cache (cheap).

Twitter used a hybrid solution of Approach A & Approach B:

- It's preferable to do more work at write time and less at read time (Approach B) — given that the average rate of published tweets is almost two orders of magnitude lower than the rate of home timeline reads.

- For users with millions of followers, Approach B is slow as it leads to millions of writes. Twitter uses Approach A for these users.
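The hybrid of the two approaches can be sketched in a few lines. This is only an illustration under stated assumptions: the in-memory dictionaries stand in for the tweets table, the follows table, and the timeline caches, and `FANOUT_LIMIT`, `follow`, `is_celebrity`, `post_tweet`, and `home_timeline` are hypothetical names, not Twitter's actual implementation. It also assumes follower counts do not change between posting and reading.

```python
from collections import defaultdict

FANOUT_LIMIT = 2  # assumed cutoff; a real system would use a far larger number

tweets = []                          # global collection of (timestamp, sender, text)
followers = defaultdict(set)         # followee -> users who follow them
following = defaultdict(set)         # follower -> users they follow
timeline_cache = defaultdict(list)   # user -> precomputed home timeline


def follow(follower, followee):
    followers[followee].add(follower)
    following[follower].add(followee)


def is_celebrity(user):
    return len(followers[user]) > FANOUT_LIMIT


def post_tweet(timestamp, sender, text):
    tweet = (timestamp, sender, text)
    tweets.append(tweet)                    # always stored in the global collection
    if not is_celebrity(sender):
        for user in followers[sender]:      # Approach B: fan out on write
            timeline_cache[user].append(tweet)


def home_timeline(user):
    celebrities = {u for u in following[user] if is_celebrity(u)}
    merged = set(timeline_cache[user])      # cheap read of the precomputed cache
    # Approach A for celebrities: merge their tweets at read time.
    merged.update(t for t in tweets if t[1] in celebrities)
    return sorted(merged, reverse=True)     # newest first


follow("alice", "bob")
for fan in ("alice", "carol", "dave"):
    follow(fan, "celeb")
post_tweet(1, "bob", "hi")
post_tweet(2, "celeb", "hello")
print(home_timeline("alice"))
```

Bob's tweet is written into Alice's cache at post time, while the celebrity's tweet is written once and merged in when Alice reads her timeline.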

Describing Performance

- Two ways to look at performance:

- When a load parameter is increased and the system resources are kept unchanged, how is the performance of the system affected?

- When a load parameter is increased, by how much should the resources be increased to keep performance unchanged?

- Response time = the time between a client sending a request and receiving a response = time to process the request (the service time) + latency.

- Latency is the duration that a request is waiting to be handled during which it is latent, awaiting service. E.g: network delays, queueing delays, etc.

- Systems' important performance metric (usually):

- Batch processing: Throughput — the number of records that can be processed per second.

- Online systems: Response time.

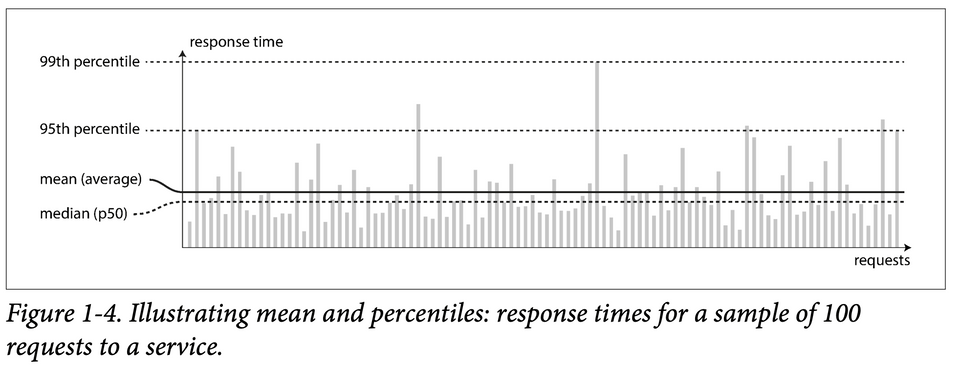

- Satisfying a request involves multiple disparate systems that can introduce random latencies — leading to random response time. Thus, response time is best thought of as a distribution of values that can be measured, instead of a single number.

- The mean is not a very good metric to know the “typical” response time, because it doesn’t tell how many users actually experienced that delay.

- Percentiles are preferred.

- 50th percentile = Median = p50

- 95th percentile = p95

- 99th percentile = p99

- 99.9th percentile = p999

- An X-th percentile is the response time threshold below which X% of requests fall. E.g: if the 95th percentile response time is 1.5 seconds, 95 out of 100 requests take less than 1.5 seconds.

- High percentiles of response times, also known as tail latencies, are used to figure out how bad the outliers are.

- Amazon observed that a 100 ms increase in response time reduces sales by 1%. Others report that a 1-second slowdown reduces a customer satisfaction metric by 16%.

- It only takes a small number of slow requests to hold up the processing of subsequent requests, an effect sometimes known as head-of-line blocking.

When generating load artificially in order to test the scalability of a system, the load-generating client needs to keep sending requests independently of the response time. If the client waits for the previous request to complete before sending the next one, that behavior has the effect of artificially keeping the queues shorter in the test than they would be in reality, which skews the measurements. See: Everything You Know About Latency Is Wrong.

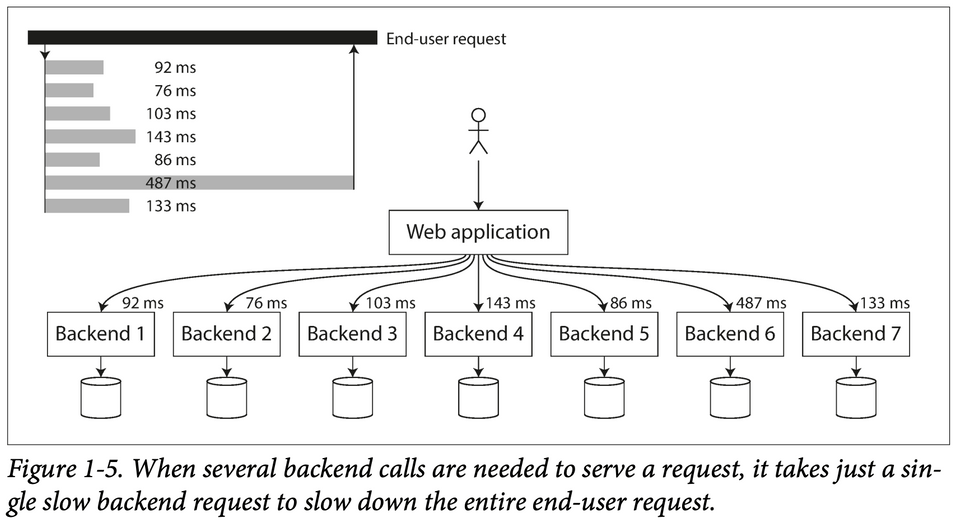

- Even if only a small percentage of backend calls are slow, the chance of getting a slow call increases if an end-user request requires multiple backend calls, and so a higher proportion of end-user requests end up being slow. This effect is known as tail latency amplification.
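The amplification effect follows from basic probability. The sketch below assumes backend calls are slow independently of one another with the same probability, which is a simplification; `p_slow_request` is an illustrative name.

```python
def p_slow_request(p_slow_call: float, num_calls: int) -> float:
    """Probability that at least one of num_calls independent backend calls is slow."""
    return 1 - (1 - p_slow_call) ** num_calls


# With 1% slow backend calls, see how the share of slow end-user requests grows.
for n in (1, 10, 100):
    print(f"{n} backend calls: {p_slow_request(0.01, n):.1%} of requests are slow")
```

With 100 backend calls per request, well over half of end-user requests hit at least one slow call, even though only 1 in 100 individual calls is slow.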

Percentiles in Practice

To add response time percentiles to the monitoring dashboards, they need to be efficiently calculated on an ongoing basis.

Example:

- Keep a rolling window of response times of requests in the last 10 minutes.

- Every minute, calculate the median and various percentiles over the values in that window and plot those metrics on a graph.

- Calculation implementation:

- Naïvely: keep a list of response times for all requests within the time window and sort that list every minute.

- Efficiently: there are algorithms that can calculate a good approximation of percentiles at minimal CPU and memory cost, such as:

- [Forward decay](https://ieeexplore.ieee.org/document/4812398)

- [t-digest](https://www.sciencedirect.com/science/article/pii/S2665963820300403)

- [HdrHistogram](http://hdrhistogram.org/)

Beware that averaging percentiles, e.g., to reduce the time resolution or to combine data from several machines, is mathematically meaningless — the right way of aggregating response time data is to add the histograms [28].
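A small example makes the point about averaging concrete. It uses the naive sort-based approach with a nearest-rank percentile; the response times for the two machines are made up for illustration, and the exact `Counter` histogram stands in for the approximate histograms that tools like HdrHistogram maintain.

```python
import math
from collections import Counter


def percentile(sorted_values, p):
    """Nearest-rank percentile: the smallest value with at least p% of values at or below it."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


# Hypothetical response times (ms) from two machines over the same window.
machine_a = [10] * 99 + [1000]   # 100 requests, one of them very slow
machine_b = [20] * 9 + [30]      # only 10 requests

p99_a = percentile(sorted(machine_a), 99)
p99_b = percentile(sorted(machine_b), 99)
averaged = (p99_a + p99_b) / 2   # not the percentile of any real distribution

# Adding histograms (value -> count) keeps every observation with its weight.
merged = Counter(machine_a) + Counter(machine_b)
p99_combined = percentile(sorted(merged.elements()), 99)

print(averaged, p99_combined)
```

The averaged figure ignores that one machine served ten times as many requests as the other, so it differs from the percentile of the combined data.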

Approaches for Coping with Load

How do we maintain good performance even when our load parameters increase by some amount?

- How to scale machines:

- Scale up: scale vertically, i.e: moving to a more powerful machine. A system that can run on a single machine is often simpler, but there is a limit to how powerful a single machine can be.

- Scale out: scale horizontally, i.e: distributing the load across multiple smaller machines. Distributing load across multiple machines is also known as a shared-nothing architecture.

- How to scale out machines:

- Elastically: automatically add computing resources when a load increase is detected. Useful when load is highly unpredictable.

- Manually: a human analyzes the capacity and decides to add more machines to the system. This is simpler and may have fewer operational surprises.

- An architecture that scales well for a particular app is built around assumptions of which operations will be common and which will be rare — the load parameters. This means that the architecture of systems that operate at large scale is usually highly specific to the application.

iii. Maintainability

- It is well known that the majority of the cost of software is not in its initial development, but in its ongoing maintenance.

- Three design principles for maintainable software systems:

- Operability — Make it easy for operations teams to keep the system running smoothly. Good operability means having good visibility into the system’s health and having effective ways of managing it.

- Simplicity — Make it easy for new engineers to understand the system, by removing as much complexity as possible from the system. Good abstractions can help reduce complexity and make the system easier to modify and adapt for new use cases.

- Evolvability — Make it easy for engineers to make changes to the system in the future, adapting it for unanticipated use cases as requirements change.

Chapter 2 - Data Models & Query Languages

This chapter looks at 3 general-purpose data models for data storage and querying:

- Relational model

- Document model

- Graph models

- Data models affect:

- How software is written

The limits of my language mean the limits of my world. — Ludwig Wittgenstein, Tractatus Logico-Philosophicus (1922)

- How the problem being solved is thought about


- Most apps are built using layered data models. For each layer, the key question is:

How is it represented in terms of the next-lower layer?

- Data-model layering example:

- App developers model the real world in terms of data-structures (usually) specific to their app.

- To store those data-structures, they are expressed in terms of a general-purpose data model like: JSON/XML documents, SQL tables, graph models, etc.

- The database represents the general-purpose data model in terms of bytes in memory, on disk, or on a network.

- At the hardware level, bytes are represented in terms of electrical currents, pulses of light, magnetic fields, etc.

- Each layer hides the complexity of the layers below it by providing a "clean data model". Abstractions like these allow different groups of people to work together effectively.

Relational Model Versus Document Model

- SQL is based on the relational model which was proposed by Edgar Codd in 1970:

- Data is organized into relations (called tables in SQL)

- Each relation is an unordered collection of tuples (rows in SQL)

- The roots of relational databases lie in business data processing, which was performed on mainframes in the 1960s-1970s:

- Transaction processing (like entering sales/banking transactions)

- Batch processing (like payroll)

Other databases at that time forced application developers to think a lot about the internal representation of the data in the database. The goal of the relational model was to hide that implementation detail behind a cleaner interface.

- Each competitor to the relational model generated a lot of hype in its time, but it never lasted:

- Network model (1970s & 1980s)

- Hierarchical model (1970s & 1980s)

- Object databases (1980s & 1990s)

- XML databases (2000s)

- Relational databases turned out to generalize well, beyond their original scope of business data processing, to a broad variety of use cases.

The Birth of NoSQL

- NoSQL doesn't refer to any particular technology; it was coined as a catchy Twitter hashtag for a meetup.

- Retroactively, it's interpreted as Not Only SQL.

- Some driving forces behind the adoption of NoSQL databases:

- Scalability: for very large datasets or very high write throughput.

- Preference for open-source.

- Specialized query operations.

- More expressive data model.

- Flexible/Schemaless.

Polyglot persistence is a term that refers to using multiple data storage technologies for varying data storage needs within an app.

The Object-Relational Impedance Mismatch

If data is stored in relational tables, an awkward translation layer is required between the objects in the application code (written in an OOP language) and the database model of tables, rows, and columns.

- Object-relational mapping (ORM) frameworks like ActiveRecord and Hibernate reduce the amount of boilerplate code required for this translation layer only to an extent.

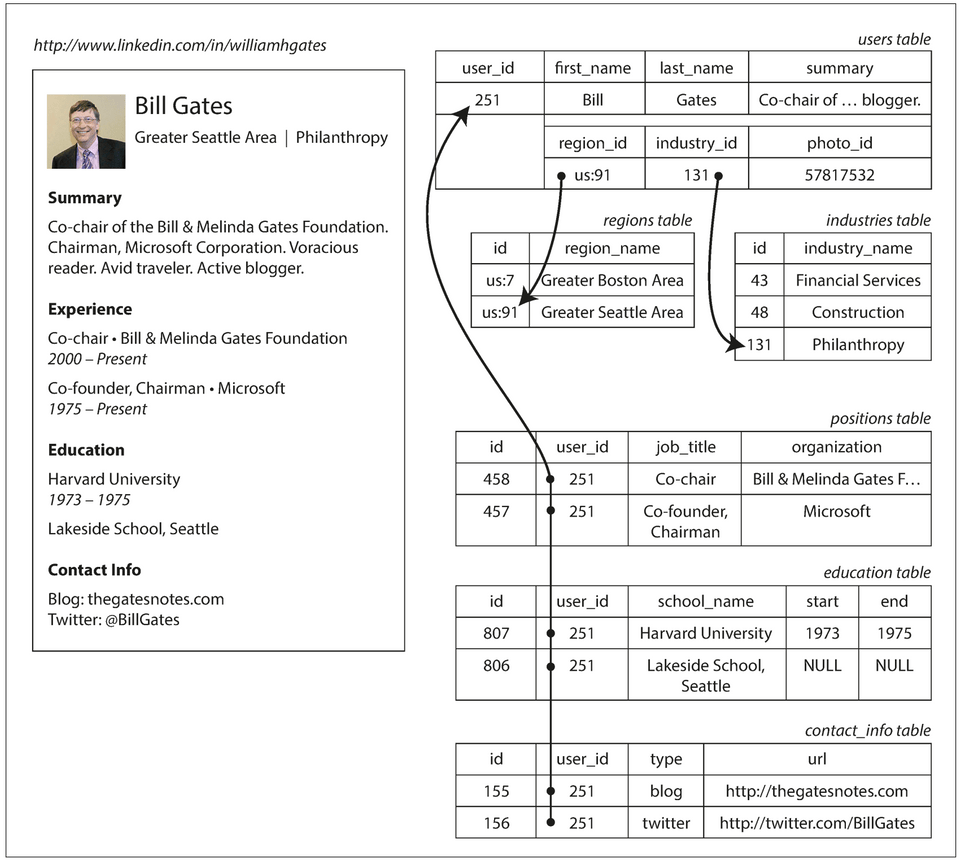

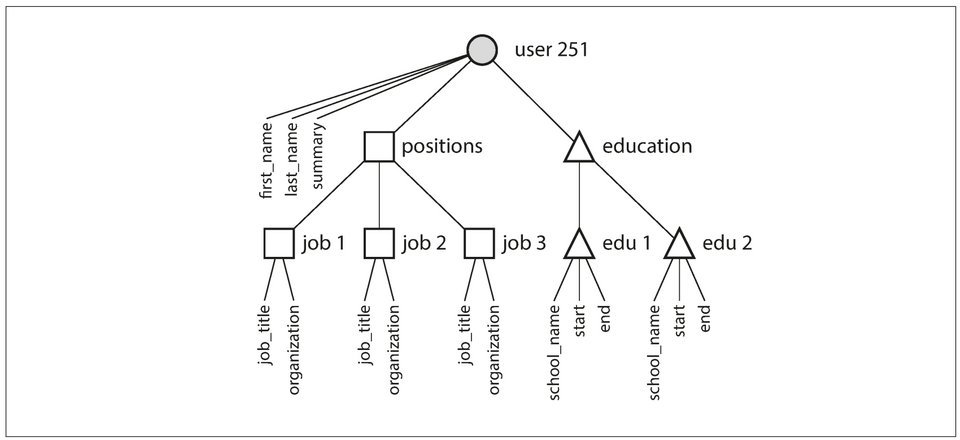

Representing a LinkedIn profile using a relational schema

Figure 2-1

- The profile as a whole can be identified by a unique identifier, `user_id`.

- One-to-one relationships: fields like `first_name` and `last_name` are modeled as columns on the users table because they appear exactly once per user.

- One-to-many relationships: users can have more than one career position, varying periods of education, and contact information. Ways to represent:

- The common normalized representation is to put positions, education, and contact information in separate tables, with a foreign key reference to the users table.

- Later versions of the SQL standard added support for structured datatypes and XML data; this allowed multi-valued data to be stored within a single row, with support for querying and indexing inside those documents.

- Encode jobs, education, and contact info as a JSON or XML document, store it on a text column in the users table, and let the application interpret its structure and content.

Representing a LinkedIn profile using JSON (Document Data Model)

```json
{
  "user_id": 251,
  "first_name": "Bill",
  "last_name": "Gates",
  "summary": "Co-chair of the Bill & Melinda Gates... Active blogger.",
  "region_id": "us:91",
  "industry_id": 131,
  "photo_url": "/p/7/000/253/05b/308dd6e.jpg",
  "positions": [
    {
      "job_title": "Co-chair",
      "organization": "Bill & Melinda Gates Foundation"
    },
    {
      "job_title": "Co-founder, Chairman",
      "organization": "Microsoft"
    }
  ],
  "education": [
    {
      "school_name": "Harvard University",
      "start": 1973,
      "end": 1975
    },
    {
      "school_name": "Lakeside School, Seattle",
      "start": null,
      "end": null
    }
  ],
  "contact_info": {
    "blog": "http://thegatesnotes.com",
    "twitter": "http://twitter.com/BillGates"
  }
}
```

- Pros:

- Can reduce the impedance mismatch between the app code and storage layer.

- Schema flexibility.

- Better locality — All the data is in one place, available with one query — compared to the relational example which requires:

- Multiple queries or

- Joins between the users table and its subordinate tables.

- Appropriate for a self-contained document like this.

- Simpler than XML.

- The one-to-many relationships imply a tree structure in the data, and the JSON representation makes this explicit:

Figure 2.2


Many-to-One and Many-to-Many Relationships

- In the preceding section, `region_id` and `industry_id` are given as IDs, not as plain-text strings.

Whether to store an ID or a text string is a question of duplication:

- With an ID, the information that is meaningful to humans (such as the word "Philanthropy") is stored in only one place, and everything that refers to it uses an ID (which only has meaning within the database).

- With the text directly, you are duplicating the human-meaningful information in every record that uses it. Maintaining consistency or updating all the redundant copies is difficult.

- The advantage of using an ID is that because it has no meaning to humans, it never needs to change: the ID can remain the same, even if the information it identifies changes.

- Removing such duplication is the key idea behind normalization in databases.

- Normalizing this data requires many-to-one relationships, which don’t fit nicely into the document model:

- many people work in one particular industry

- In relational databases, it’s normal to refer to rows in other tables by ID, because joins are easy.

- In document databases, joins are not needed for one-to-many tree structures, and support for joins is often weak.

- If the database itself does not support joins, the app developer has to emulate a join in app code by making multiple queries to the database.
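Emulating a join in application code can look roughly like the sketch below. The dictionaries are hypothetical stand-ins for collections in a document store, `find_one` stands in for one round trip to the database, and the region and industry names attached to the IDs are illustrative values.

```python
# Hypothetical collections: each maps a document ID to a document.
users = {251: {"first_name": "Bill", "region_id": "us:91", "industry_id": 131}}
regions = {"us:91": {"name": "Greater Seattle Area"}}
industries = {131: {"name": "Philanthropy"}}


def find_one(collection, doc_id):
    """Stand-in for a single query to the database."""
    return collection[doc_id]


def load_profile(user_id):
    """Resolve the ID references with follow-up queries instead of a join."""
    profile = dict(find_one(users, user_id))                                  # query 1
    profile["region"] = find_one(regions, profile["region_id"])["name"]       # query 2
    profile["industry"] = find_one(industries, profile["industry_id"])["name"]  # query 3
    return profile


print(load_profile(251))
```

Each resolved reference costs an extra round trip, which is work a relational database would do inside a single joined query.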

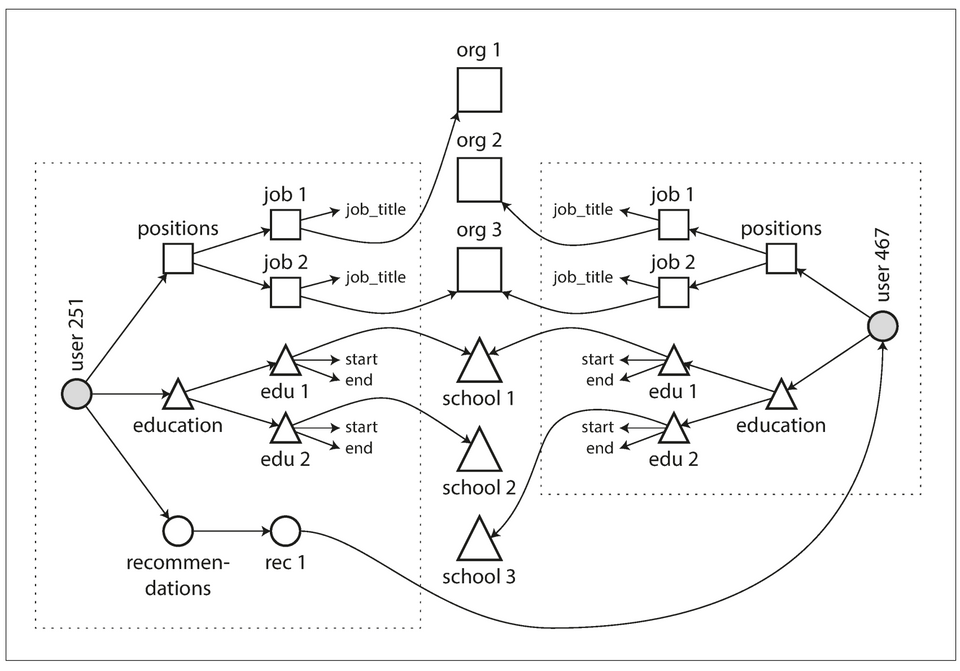

- New product requirements for the profile in the preceding section can introduce many-to-many relationships. E.g:

- Making organizations and schools entities (i.e: tables with their own features) instead of plain strings.

- User recommendations

Figure 2.4: The data within each dotted rectangle can be grouped into one document, but the references to organizations, schools, and other users need to be represented as references, and require joins when queried.


Are Document Databases Repeating History?

Hierarchical model

- Popular in the 1970s. It represented all data as a tree of records nested within records, much like the JSON structure of Figure 2.2.

- Cons:

- Worked well for one-to-many relationships, but it made many-to-many relationships difficult.

- No support for joins.

- Two models were proposed to solve the limitations of the hierarchical model:

a. The network/CODASYL model

- Standardized by a committee called the Conference on Data Systems Languages (CODASYL).

- The CODASYL model was a generalization of the hierarchical model:

- In the hierarchical model, every record has exactly one parent.

- In the network model, a record could have multiple parents.

- For example, there could be one record for the "Greater Seattle Area" region, and every user who lived in that region could be linked to it. This allowed many-to-one and many-to-many relationships to be modeled.

The links between records in the network model were not foreign keys, but more like pointers in a programming language (while still being stored on disk). The only way of accessing a record was to follow a path from a root record along these chains of links. This was called an access path.

- Cons:

- Difficult to make changes to the app's data model — because this usually requires changing all the handwritten database query code to handle the new access paths

- Complexity in app code — A query in CODASYL was performed by moving a cursor through the database by iterating over lists of records and following access paths.

b. The relational model

- What the relational model did, by contrast, was to lay out all the data in the open: a relation (table) is simply a collection of tuples (rows). There are no complicated access paths to follow if you want to look at the data.

- The query optimizer automatically decides which parts of the query to execute in which order, and which indexes to use. Those choices are effectively the “access path”.

Comparison to document databases

- Document databases reverted back to the hierarchical model in one aspect: storing nested records within their parent record rather than in a separate table.

However, when it comes to representing many-to-one and many-to-many relationships, relational and document databases are not fundamentally different: in both cases, the related item is referenced by a unique identifier, which is called a foreign key in the relational model and a document reference in the document model. That identifier is resolved at read time by using a join or follow-up queries. To date, document databases have not followed the path of CODASYL.

Relational Versus Document Databases Today

Which data model leads to simpler application code?

- This question hinges on the relationships that exist between data items.

- For tree-like structures, the document model shines.

- For many-to-many or many-to-one relationships, the relational model shines.

With the document model you cannot refer directly to a nested item within a document, but instead you need to say something like “the second item in the list of positions for user 251” (much like an access path in the hierarchical model).

Schema flexibility in the document model

- Schema-on-read: the structure of the data is implicit, and only interpreted when the data is read. Also known as schemaless, but this term is misleading, as the code that reads the data usually assumes some kind of structure—i.e., there is an implicit schema, but it is not enforced by the database.

- Schema-on-write: the traditional approach of relational databases, where the schema is explicit and the database ensures all written data conforms to it.

- Most document databases are schema-on-read.

- Schema-on-read is similar to dynamic (runtime) type checking in programming languages, whereas schema-on-write is similar to static (compile-time) type checking.
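With schema-on-read, the implicit schema lives in the code that reads the data. The sketch below assumes a hypothetical migration in which older documents store a single `name` field and newer ones store `first_name`; the field names and the function are illustrative.

```python
def first_name(user: dict) -> str:
    """Read a first name from either the old or the new document shape."""
    if "first_name" in user:
        return user["first_name"]
    # Assumed older shape: only a full "name" field was stored.
    return user["name"].split(" ")[0]


old_doc = {"name": "Bill Gates"}
new_doc = {"first_name": "Bill", "last_name": "Gates"}
print(first_name(old_doc), first_name(new_doc))
```

A schema-on-write database would instead change the schema and migrate the existing rows so that every reader can rely on one shape.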

Data locality for queries

- A document is usually stored as a single continuous string, encoded as JSON, XML, BSON (MongoDB), etc.

- This locality leads to fewer disk seeks and can lead to better performance ONLY if a large part of the document is needed at the same time.

The idea of grouping related data together for locality is not limited to the document model. For example, Google’s Spanner database offers the same locality properties in a relational data model, by allowing the schema to declare that a table’s rows should be interleaved (nested) within a parent table.

Convergence of document and relational databases

- Most modern relational databases have support for JSON and XML documents.

- Some document databases either support relational-like joins (like RethinkDB) or handle them in their client-side driver (MongoDB).

Query Languages for Data

- In a declarative query language, like SQL or relational algebra, the pattern of the data required is specified (what conditions the results must meet, and how the data should be transformed, e.g., sorted, grouped, and aggregated) but not how to achieve that goal. It is up to the database system’s query optimizer to decide which indexes and which join methods to use, and in which order to execute various parts of the query.

```sql
SELECT * FROM animals WHERE family = 'Sharks';
```

- An imperative language tells the computer to perform certain operations in a certain order. Imagine stepping through the code line by line, evaluating conditions, updating variables, and deciding whether to go around the loop one more time.

```javascript
function getSharks() {
  var sharks = [];
  for (var i = 0; i < animals.length; i++) {
    if (animals[i].family === "Sharks") {
      sharks.push(animals[i]);
    }
  }
  return sharks;
}
```

- Pros of declarative query language:

- Concise.

- Easier to work with.

- Hides implementation details of the database.

- It is more limited in functionality than an imperative language, which gives the database more room for automatic optimizations.

- Lends itself to parallel execution

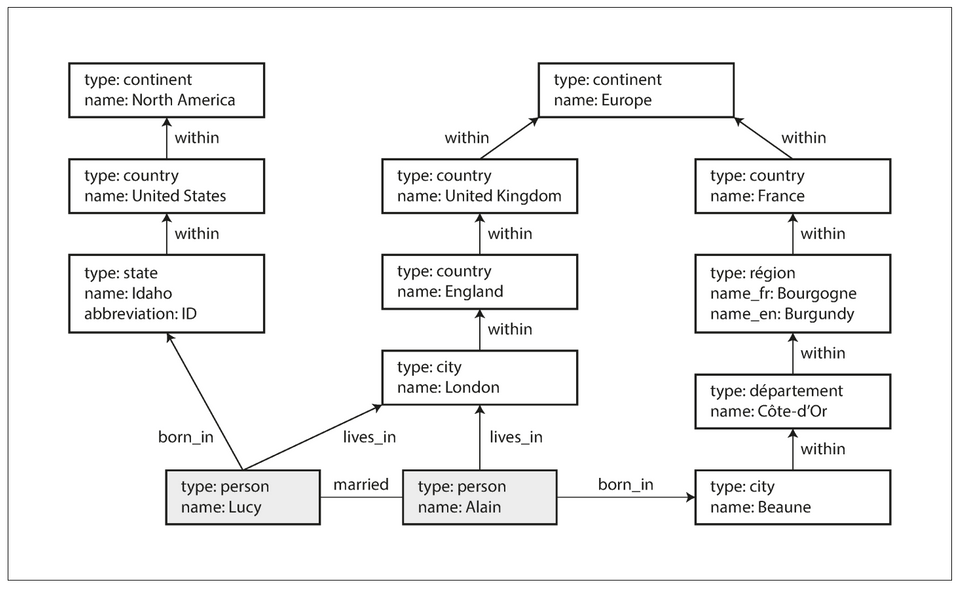

Graph-Like Data Models

- A graph consists of two kinds of objects: vertices (also known as nodes or entities) and edges (also known as relationships or arcs).

- There are several different, but related, ways of structuring and querying data in graphs.

Property Graphs

In the property graph model, each vertex consists of:

- A unique identifier

- A set of outgoing edges

- A set of incoming edges

- A collection of properties (key-value pairs)

Each edge consists of:

- A unique identifier

- The vertex at which the edge starts (the tail vertex)

- The vertex at which the edge ends (the head vertex)

- A label to describe the kind of relationship between the two vertices

- A collection of properties (key-value pairs)

Relational equivalent:

- one table for vertices.

- one table for edges.

- The head and tail vertex are stored for each edge; to get the set of incoming or outgoing edges for a vertex, query the edges table by `head_vertex` or `tail_vertex`, respectively.

```sql
CREATE TABLE vertices (
    vertex_id   integer PRIMARY KEY,
    properties  json
);

CREATE TABLE edges (
    edge_id     integer PRIMARY KEY,
    tail_vertex integer REFERENCES vertices (vertex_id),
    head_vertex integer REFERENCES vertices (vertex_id),
    label       text,
    properties  json
);

CREATE INDEX edges_tails ON edges (tail_vertex);
CREATE INDEX edges_heads ON edges (head_vertex);
```

Some important aspects of this model are:

- Any vertex can have an edge connecting it with any other vertex. There is no schema that restricts which kinds of things can or cannot be associated. This is useful for evolvability: E.g: Fig 2.5 can be extended to include allergen vertices, which can have edges to people and food items that contain such substances.

- Given any vertex, you can efficiently find both its incoming and its outgoing edges, and thus traverse the graph—i.e., follow a path through a chain of vertices — both forward and backward.

- By using different labels for different kinds of relationships, you can store several different kinds of information in a single graph, while still maintaining a clean data model.
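The same model can be sketched in memory to show why traversal works in both directions. This is a minimal illustration, not how any particular graph database stores data; the `PropertyGraph` class and its method names are hypothetical. The `outgoing` and `incoming` maps play the role of the two indexes on the edges table.

```python
from collections import defaultdict


class PropertyGraph:
    def __init__(self):
        self.vertices = {}                 # vertex_id -> properties
        self.edges = {}                    # edge_id -> (tail, head, label, properties)
        self.outgoing = defaultdict(list)  # tail vertex -> edge ids
        self.incoming = defaultdict(list)  # head vertex -> edge ids

    def add_vertex(self, vertex_id, **properties):
        self.vertices[vertex_id] = properties

    def add_edge(self, edge_id, tail, head, label, **properties):
        self.edges[edge_id] = (tail, head, label, properties)
        self.outgoing[tail].append(edge_id)
        self.incoming[head].append(edge_id)

    def successors(self, vertex_id, label):
        """Follow outgoing edges with the given label (forward traversal)."""
        return [self.edges[e][1] for e in self.outgoing[vertex_id]
                if self.edges[e][2] == label]

    def predecessors(self, vertex_id, label):
        """Follow incoming edges with the given label (backward traversal)."""
        return [self.edges[e][0] for e in self.incoming[vertex_id]
                if self.edges[e][2] == label]


g = PropertyGraph()
g.add_vertex("usa", name="United States", type="country")
g.add_vertex("idaho", name="Idaho", type="state")
g.add_vertex("lucy", name="Lucy")
g.add_edge(1, "idaho", "usa", "within")
g.add_edge(2, "lucy", "idaho", "born_in")

print(g.successors("idaho", "within"), g.predecessors("idaho", "born_in"))
```

Because nothing restricts which vertices an edge may connect, adding a new kind of vertex or label needs no change to the structure itself.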

The Cypher Query Language

Cypher is a declarative query language for property graphs, created for the Neo4j graph database.

- A subset of the data in [Fig 2.5](#fig2.5), represented as a Cypher query:

```cypher
CREATE
  (NAmerica:Location {name:'North America', type:'continent'}),  // Vertex symbolic name: NAmerica
  (USA:Location      {name:'United States', type:'country'  }),
  (Idaho:Location    {name:'Idaho',         type:'state'    }),
  (Lucy:Person       {name:'Lucy' }),
  // Creates an edge labeled WITHIN, with Idaho as the tail node and USA as the head node.
  (Idaho) -[:WITHIN]->  (USA)  -[:WITHIN]-> (NAmerica),
  (Lucy)  -[:BORN_IN]-> (Idaho)
```

Query: Find the names of all the people who emigrated from the United States to Europe.

```cypher
MATCH
  (person) -[:BORN_IN]->  () -[:WITHIN*0..]-> (us:Location {name:'United States'}),
  (person) -[:LIVES_IN]-> () -[:WITHIN*0..]-> (eu:Location {name:'Europe'})
RETURN person.name

// `(person) -[:BORN_IN]-> ()` matches any two vertices that are related by an edge labeled `BORN_IN`.
// The tail vertex of that edge is bound to the variable `person`, and the head vertex is left unnamed.
```

Interpretation: Find any vertex (call it `person`) that meets both of the following conditions:

- `person` has an outgoing `BORN_IN` edge to some vertex. From that vertex, you can follow a chain of outgoing `WITHIN` edges until eventually you reach a vertex of type `Location`, whose name property is equal to "United States".

- That same `person` vertex also has an outgoing `LIVES_IN` edge. Following that edge, and then a chain of outgoing `WITHIN` edges, you eventually reach a vertex of type `Location`, whose name property is equal to "Europe".

For each such person vertex, return the name property.

Graph Queries in SQL

- While graph data can be queried with SQL, it is more difficult:

In a relational database, you usually know in advance which joins you need in your query. In a graph query, you may need to traverse a variable number of edges before you find the vertex you’re looking for; that is, the number of joins is not fixed in advance. In the previous example, this happens in the `() -[:WITHIN*0..]-> ()` rule in the Cypher query.

- Since SQL:1999, this idea of variable-length traversal paths in a query can be expressed using something called recursive common table expressions (the `WITH RECURSIVE` syntax).
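A recursive common table expression can be tried out with SQLite through Python's built-in `sqlite3` module. The sketch below is a simplified illustration covering only the "born in the United States" half of the earlier query: the tables use a plain `name` column instead of JSON properties, and the numeric vertex IDs are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE vertices (vertex_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE edges (tail_vertex INTEGER, head_vertex INTEGER, label TEXT);
INSERT INTO vertices VALUES (1, 'North America'), (2, 'United States'),
                            (3, 'Idaho'), (4, 'Lucy');
INSERT INTO edges VALUES (2, 1, 'within'), (3, 2, 'within'), (4, 3, 'born_in');
""")

query = """
WITH RECURSIVE in_usa(vertex_id) AS (
    -- Start from the United States vertex itself.
    SELECT vertex_id FROM vertices WHERE name = 'United States'
  UNION
    -- Repeatedly add every vertex with a 'within' edge into the set so far.
    SELECT edges.tail_vertex FROM edges
    JOIN in_usa ON edges.head_vertex = in_usa.vertex_id
    WHERE edges.label = 'within'
)
SELECT vertices.name FROM edges
JOIN in_usa ON edges.head_vertex = in_usa.vertex_id
JOIN vertices ON vertices.vertex_id = edges.tail_vertex
WHERE edges.label = 'born_in'
"""

born_in_usa = [row[0] for row in conn.execute(query)]
print(born_in_usa)
```

The full emigration query would need a second recursive set for Europe and a further join on `lives_in` edges, which is why the SQL version ends up much longer than the Cypher one.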

Triple-Stores and SPARQL

- The triple-store model is mostly equivalent to the property graph model, using different words to describe the same ideas.

- In a triple-store, all information is stored in the form of very simple three-part statements: `(subject, predicate, object)`, e.g: `(Jim, likes, bananas)`.

- The subject of a triple is equivalent to a vertex in a graph. The object is one of two things:

- Primitive data: e.g: `(lucy, age, 33)`. The predicate and object of the triple are equivalent to the key and value of a property on the subject vertex.

- Vertex: e.g: `(lucy, marriedTo, alain)`. The predicate is the label of an edge in the graph, the subject is the tail vertex, and the object is the head vertex.

- A subset of the data in [Figure 2.5](#fig2.5), represented as Turtle triples:

```turtle
@prefix : <urn:example:>.
_:lucy     a       :Person.
_:lucy     :name   "Lucy".
_:lucy     :bornIn _:idaho.
_:idaho    a       :Location.
_:idaho    :name   "Idaho".
_:idaho    :type   "state".
_:idaho    :within _:usa.
_:usa      a       :Location.
_:usa      :name   "United States".
_:usa      :type   "country".
_:usa      :within _:namerica.
_:namerica a       :Location.
_:namerica :name   "North America".
_:namerica :type   "continent".
```

The SPARQL query language

Query: The same query as before — finding people who have moved from the US to Europe:

```sparql
PREFIX : <urn:example:>

SELECT ?personName WHERE {
  ?person :name ?personName.
  ?person :bornIn  / :within* / :name "United States".
  ?person :livesIn / :within* / :name "Europe".
}
```

The structure is very similar. The following two expressions are equivalent (variables start with a question mark in SPARQL):

```cypher
// Cypher
(person) -[:BORN_IN]-> () -[:WITHIN*0..]-> (location)
```

```sparql
# SPARQL
?person :bornIn / :within* ?location.
```

Graph Databases Compared to the Network Model

- In a graph database, any vertex can have an edge to any other vertex. In CODASYL, a database had a schema that restricted/controlled nesting.

- In a graph database, you can refer directly to any vertex by its unique ID, or you can use an index to find vertices with a particular value. In CODASYL, you have to use the record's access path.

- In a graph database, vertices and edges are not ordered (you can only sort the results when making a query). In CODASYL, the children of a record were an ordered set.

- Graph databases support high-level declarative query languages. CODASYL relied on imperative queries.

The Foundation: Datalog

Datalog is a much older language than SPARQL or Cypher.

It's the query language of Datomic.

Cascalog is a Datalog implementation for querying large datasets in Hadoop.

Datalog’s data model is similar to the triple-store model. Instead of writing a triple as `(subject, predicate, object)`, it's written as `predicate(subject, object)`.

- A subset of the data in [Figure 2.5](#fig2.5), represented as Datalog facts:

      name(namerica, 'North America').
      type(namerica, continent).
      name(usa, 'United States').
      type(usa, country).
      within(usa, namerica).
      name(idaho, 'Idaho').
      type(idaho, state).
      within(idaho, usa).
      name(lucy, 'Lucy').
      born_in(lucy, idaho).

Query: The same query as before — finding people who have moved from the US to Europe:

    within_recursive(Location, Name) :- name(Location, Name).     /* Rule 1 */

    within_recursive(Location, Name) :- within(Location, Via),    /* Rule 2 */
                                        within_recursive(Via, Name).

    migrated(Name, BornIn, LivingIn) :- name(Person, Name),       /* Rule 3 */
                                        born_in(Person, BornLoc),
                                        within_recursive(BornLoc, BornIn),
                                        lives_in(Person, LivingLoc),
                                        within_recursive(LivingLoc, LivingIn).

    ?- migrated(Who, 'United States', 'Europe').
    /* Who = 'Lucy'. */

We define rules that tell the database about new predicates. Here, we define `within_recursive` and `migrated`. These predicates aren’t triples stored in the database, but instead they are derived from data or from other rules.

In rules, words that start with an uppercase letter are variables. For example, `name(Location, Name)` matches the triple `name(namerica, 'North America')` with variable bindings `Location = namerica` and `Name = 'North America'`.
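To make the idea of derived predicates more concrete, here is a rough Python sketch of how Rules 1 and 2 could be evaluated by repeatedly applying them until no new facts appear (a naive fixpoint). This is an illustration only, not how Datomic or Cascalog work internally; the `WITHIN` and `NAME` sets simply restate a few of the facts above as Python tuples.

```python
# Stored facts, restated as Python tuples (illustrative subset).
WITHIN = {("idaho", "usa"), ("usa", "namerica")}
NAME = {("namerica", "North America"), ("usa", "United States"), ("idaho", "Idaho")}

def within_recursive(within, name):
    """Derive within_recursive(Location, Name) by applying Rules 1 and 2 to a fixpoint."""
    derived = set(name)                       # Rule 1: name(Location, Name)
    while True:
        new_facts = {
            (location, place_name)            # Rule 2: within(Location, Via),
            for (location, via) in within     #         within_recursive(Via, Name)
            for (via2, place_name) in derived
            if via == via2
        }
        if new_facts <= derived:              # nothing new: fixpoint reached
            return derived
        derived |= new_facts

facts = within_recursive(WITHIN, NAME)
print(("idaho", "North America") in facts)
```

Each pass through the loop follows one more `within` edge, which is why the rule can match paths of any length.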

Chapter 3 — Storage & Retrieval

Chapter 4 - Encoding & Evolution

- Compatibility is a relationship between one process that encodes the data, and another process that decodes it.

- Backward compatibility: Newer code can read data that was written by older code.

- Forward compatibility: Older code can read data that was written by newer code.

- Schemaless databases can contain a mixture of data in older and newer formats.

- Databases with schemas enforce data conformity as the schema changes.

Formats for Encoding Data

- Programs usually work with data in two different representations:

- In-memory: Data is kept in objects, arrays, lists, hash tables, etc.

- Self-contained byte sequence: Used when writing data to a file or network.

- Translation is needed between the two representations:

- In-memory to byte sequence — Known as serialization, or encoding, or marshalling

- Byte sequence to in-memory: known as deserialization, or decoding, or unmarshalling, or parsing.

Language-Specific Formats

- Many programming languages come with built-in support for encoding in-memory objects into byte sequences:

- Java has java.io.Serializable.

- Ruby has Marshal.

- Python has pickle, ...

- Pro:

- Convenient: It's already included in the programming language.

- Cons:

- Coupled to the programming language.

- Security concern as arbitrary classes can be instantiated.

- Versioning is absent or abysmal.

- Inefficiencies in CPU usage and size of encoded structure.

JSON, XML, & CSV Formats

- These are standardized textual (human readable) encoding formats that are used in many programming languages.

- XML is verbose and unnecessarily complicated; JSON is popular and simpler than XML; CSV is less powerful than both.

- Cons:

- Number encoding ambiguity: JSON doesn't distinguish integers and floating-point numbers; XML and CSV don't distinguish numbers and strings (without an external schema).

- JSON & XML don't support binary strings (sequences of bytes without a character encoding). Albeit inefficient, Base64 encoding binary data is used to circumvent this limitation.

- Optional & complicated schema support for XML & JSON. Consumers that don't use the schema have to hardcode the appropriate encoding/decoding logic (for correct interpretation: Is it base64 encoded? is it a number?).

- No schema support for CSV. Applications define the meaning of each column & row.
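Two of the cons above can be checked in a few lines of Python. This is a small sketch using only the standard library; the variable names and the 30-byte payload are arbitrary choices for illustration.

```python
import base64
import json

# An integer above 2**53 cannot be represented exactly as an IEEE 754 double,
# which is what a parser that treats every JSON number as floating point will use.
big = 2**53 + 1
as_double = float(big)

# Python's json module happens to keep integers exact, so whether precision is
# lost depends on the language and parser of the consumer.
round_tripped = json.loads(json.dumps({"id": big}))["id"]

# Binary data must be wrapped in text; Base64 grows it by roughly one third.
payload = bytes(range(30))
encoded = base64.b64encode(payload).decode("ascii")

print(int(as_double) == big, round_tripped == big, len(payload), len(encoded))
```

The consumer also has to know, from somewhere outside the data, that the string field is Base64 and not ordinary text.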

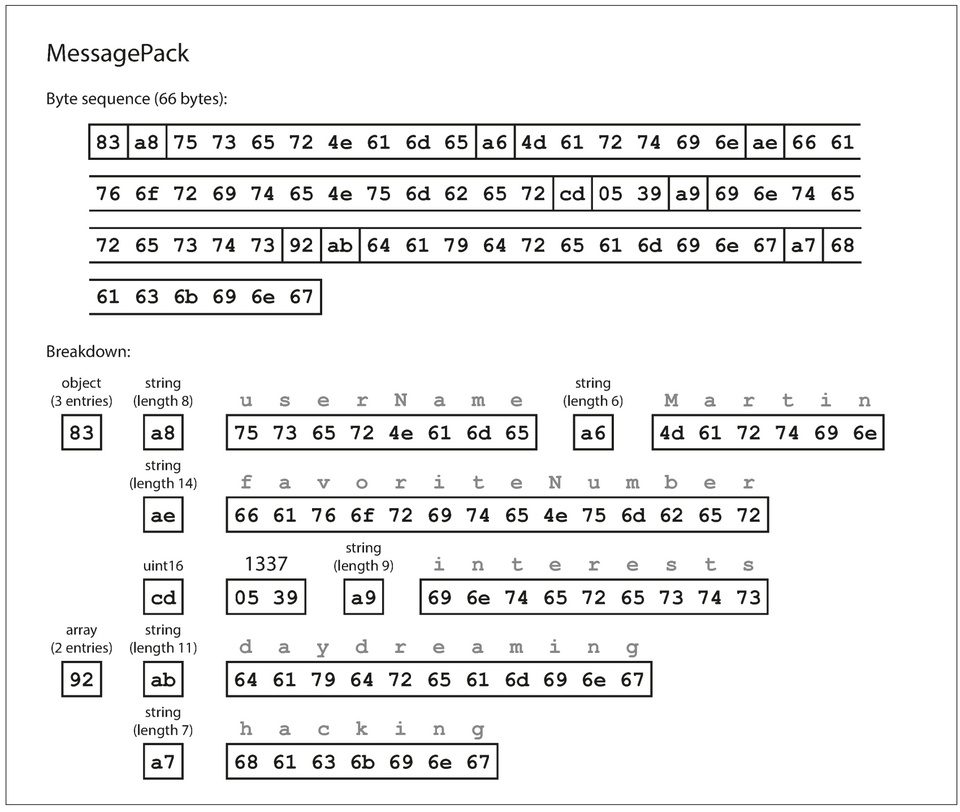

Binary Encoding

    {
      "userName": "Martin",
      "favoriteNumber": 1337,
      "interests": ["daydreaming", "hacking"]
    }

- Binary encoding format for JSON: MessagePack, BSON, BJSON, UBJSON, BISON, Smile, ...

- Binary encoding format for XML: WBXML, Fast Infoset, ...

- These binary formats can extend the set of datatypes:

- Distinguishing integers & floating-point numbers

- Binary string support

- Because these binary formats don't prescribe a schema, they need to include all the object field names within the encoded data.

- The example record encoded with MessagePack is 66 bytes long, compared to the 81 bytes taken by the textual JSON encoding (with whitespace removed).

- The downside of binary encoding is that data needs to be decoded before it is human-readable.
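The byte counts can be reproduced with a hand-rolled encoder. The sketch below implements only the small subset of MessagePack needed for the example record (short strings, small unsigned integers, short arrays and maps); the `pack` function is written for this illustration and is not a replacement for a real MessagePack library.

```python
import json

def pack(value):
    """Encode a small subset of MessagePack: fixstr, small uints, fixarray, fixmap."""
    if isinstance(value, str):
        raw = value.encode("utf-8")
        assert len(raw) < 32, "sketch only handles fixstr"
        return bytes([0xA0 | len(raw)]) + raw
    if isinstance(value, int):
        assert 0 <= value < 2**16, "sketch only handles small unsigned ints"
        if value < 128:
            return bytes([value])                        # positive fixint
        if value < 256:
            return bytes([0xCC, value])                  # uint 8
        return bytes([0xCD]) + value.to_bytes(2, "big")  # uint 16
    if isinstance(value, list):
        assert len(value) < 16, "sketch only handles fixarray"
        return bytes([0x90 | len(value)]) + b"".join(pack(v) for v in value)
    if isinstance(value, dict):
        assert len(value) < 16, "sketch only handles fixmap"
        body = b"".join(pack(k) + pack(v) for k, v in value.items())
        return bytes([0x80 | len(value)]) + body
    raise TypeError(f"unsupported type: {type(value).__name__}")

record = {
    "userName": "Martin",
    "favoriteNumber": 1337,
    "interests": ["daydreaming", "hacking"],
}
binary = pack(record)
text = json.dumps(record, separators=(",", ":")).encode("utf-8")
print(len(binary), len(text))
```

Most of the 66 bytes are still the field names, which is why the saving over the 81-byte JSON text is modest.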

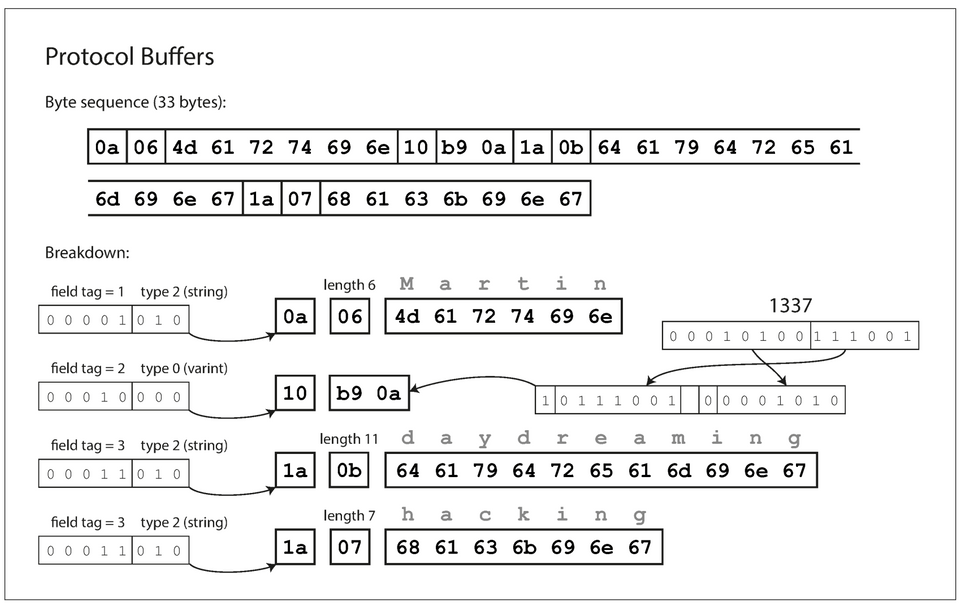

Thrift & Protocol Buffers

Apache Thrift & Protocol Buffers (protobuf) are binary encoding formats.

Both have code generation tools that use a required schema definition to produce classes that implement the schema in various programming languages.

Schema for the [example record](#example4.1) in Thrift IDL:

    struct Person {
      1: required string userName,
      2: optional i64 favoriteNumber,
      3: optional list<string> interests
    }

Schema for the [example record](#example4.1) with Protobuf:

    message Person {
      required string user_name = 1;
      optional int64 favorite_number = 2;
      repeated string interests = 3;
    }

Required/optional markers are only used for runtime verification.

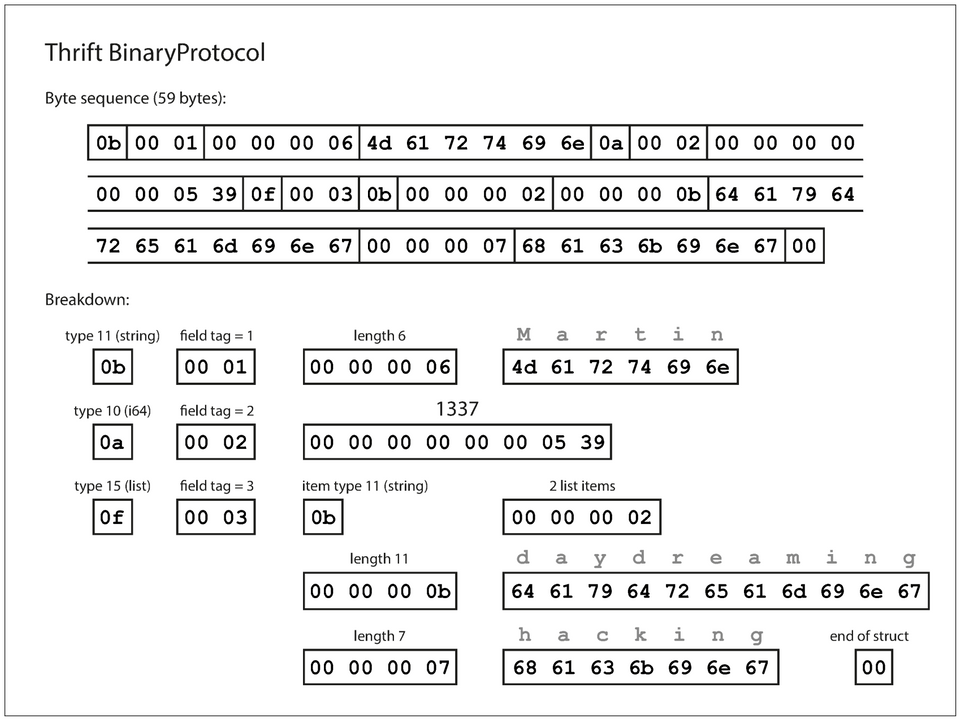

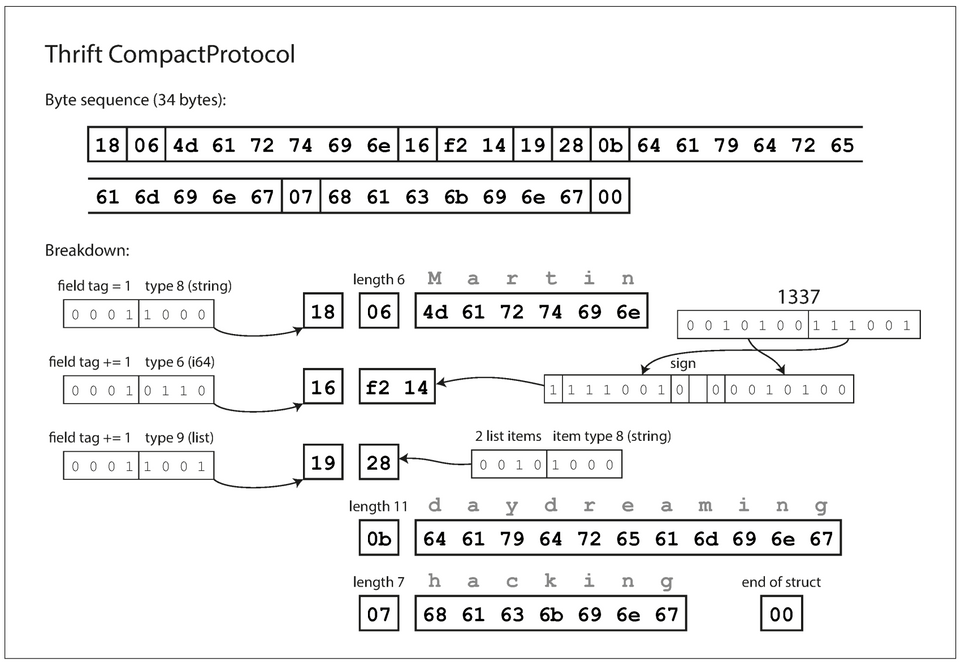

Thrift has two encoding formats: BinaryProtocol & CompactProtocol.

Example record encoded with Thrift's BinaryProtocol takes 59 bytes.

Note: No field names. Instead, field tags from the schema definition are used.

Thrift's CompactProtocol is semantically equivalent to BinaryProtocol, but packs the same information into 34 bytes.

- It packs the field tag & type into one byte

- Uses variable length integers: Rather than using a full eight bytes for the number 1337, it's encoded in two bytes, with the top bit of each byte used to indicate whether there are still more bytes to come. This means numbers between –64 and 63 are encoded in one byte, numbers between –8192 and 8191 are encoded in two bytes, etc.
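The variable-length integer scheme can be sketched in a few lines. This assumes the zigzag mapping for signed values (which is what produces the –64 to 63 one-byte range quoted above); `zigzag` and `encode_varint` are names chosen for this example.

```python
def zigzag(n):
    """Map a signed 64-bit integer to an unsigned one so small magnitudes stay small."""
    return (n << 1) ^ (n >> 63)

def encode_varint(n):
    """Encode a signed integer as a zigzag varint with 7 payload bits per byte."""
    value = zigzag(n)
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)   # top bit set: more bytes follow
        else:
            out.append(low7)          # top bit clear: this is the last byte
            return bytes(out)

print(encode_varint(1337).hex(), len(encode_varint(63)), len(encode_varint(64)))
```

With this mapping, 1337 fits in two bytes, and each extra byte adds seven more bits of range.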

Example record encoded with Protobuf takes 33 bytes.

Field tags & Schema evolution

- An encoded record is just a concatenation of its encoded fields. Each field is identified by its tag number and annotated with a datatype.

- Unset fields are omitted from the encoded record.

Maintaining forward-compatibility

- Field names can be changed, but field tag numbers can't be changed without invalidating existing encoded data.

- Fields can be added to a schema, using distinct tag numbers — Old code simply ignores it.

- Required fields can't be removed afterwards.

Maintaining backward-compatibility

- New code can read old data as long as field tag numbers are unique.

- New fields can't be required, as old code can't write them. They must be optional or have a default value.

- Tag numbers of removed fields must be reserved so that they are never reused.
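The compatibility rules above follow from decoding by tag number. Here is a simplified sketch in which a record is a list of `(tag, value)` pairs rather than real Thrift or Protobuf bytes; the two schema dictionaries and the `encode`/`decode` helpers are hypothetical names for this illustration.

```python
# Each schema maps a tag number to a field name (illustrative only).
OLD_SCHEMA = {1: "user_name", 2: "favorite_number"}
NEW_SCHEMA = {1: "user_name", 2: "favorite_number", 3: "interests"}

def encode(record, schema):
    """Encode a record as (tag, value) pairs; unset fields are simply omitted."""
    tag_of = {name: tag for tag, name in schema.items()}
    return [(tag_of[name], value) for name, value in record.items() if value is not None]

def decode(pairs, schema):
    """Decode (tag, value) pairs, skipping any tag the reader's schema does not know."""
    record = {name: None for name in schema.values()}   # absent optional fields stay None
    for tag, value in pairs:
        if tag in schema:
            record[schema[tag]] = value
    return record

new_data = encode(
    {"user_name": "Martin", "favorite_number": 1337, "interests": ["hacking"]},
    NEW_SCHEMA,
)
print(decode(new_data, OLD_SCHEMA))   # old code reading new data ignores tag 3
```

In the real formats the datatype annotation on each field tells old code how many bytes to skip for a tag it doesn't recognize.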

Datatype & Schema evolution

- Changing the datatype for numbers is possible, but could lose precision or get truncated.

- Protobuf has a `repeated` marker instead of a list or array datatype; this allows evolution from single-valued fields into repeated (multi-valued) fields of the same type.

- Thrift has a dedicated list datatype, which has the advantage of supporting nested lists.

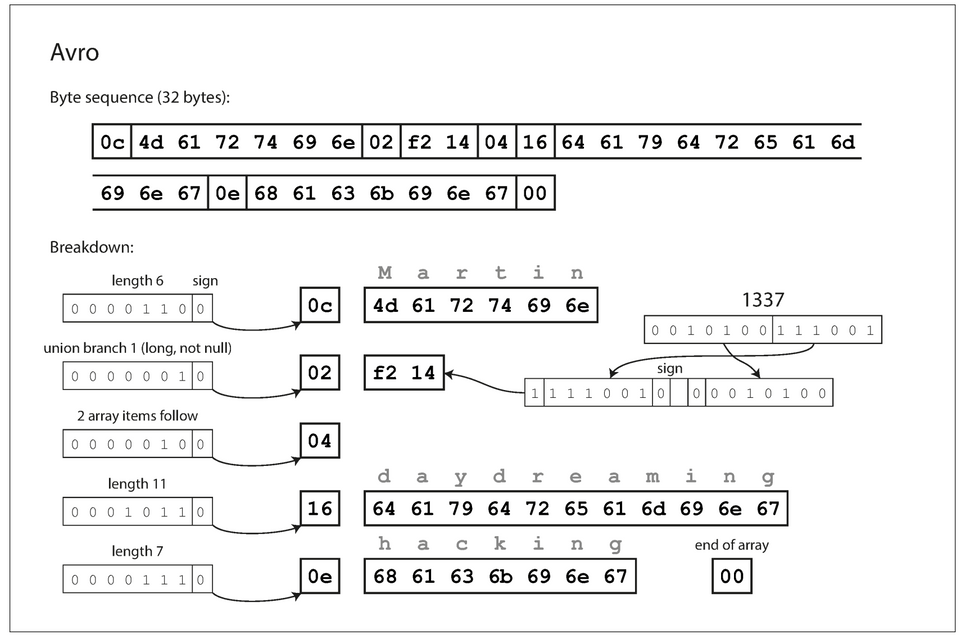

Avro

- Apache Avro is a binary encoding format.

- Apache Avro has two schema languages:

- Avro IDL (intended for human editing)

      record Person {
        string userName;
        union { null, long } favoriteNumber = null;
        array<string> interests;
      }

- JSON (more machine readable)

      {
        "type": "record",
        "name": "Person",
        "fields": [
          {
            "name": "userName",
            "type": "string"
          },
          {
            "name": "favoriteNumber",
            "type": ["null", "long"],
            "default": null
          },
          {
            "name": "interests",
            "type": {
              "type": "array",
              "items": "string"
            }
          }
        ]
      }

- Example record encoded with Avro takes 32 bytes.

- Compared to Thrift or Protobuf, there is no field tag number with an annotated datatype in the encoded data. Instead, the schema is used to determine the order and datatype of fields in the encoded data. Thus the writer's and reader's schemas must be compatible.

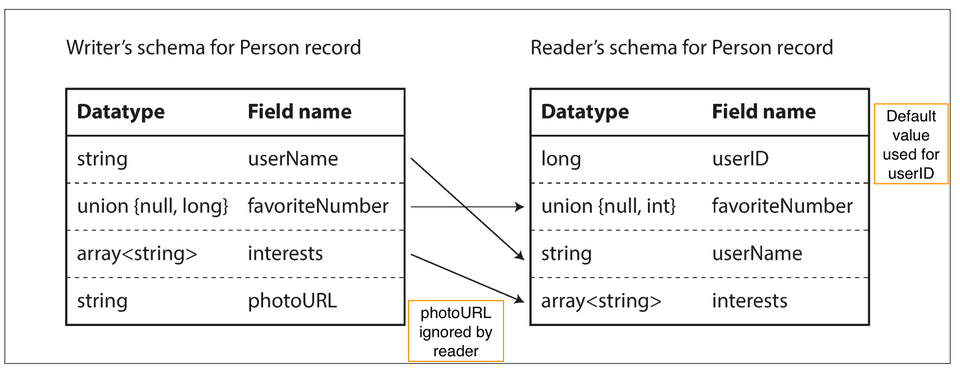

Writer & Reader Schema

- The writer’s schema and the reader’s schema don’t have to be the same — they only need to be compatible.

- During decoding, the Avro library resolves the differences by looking at the writer’s schema and the reader’s schema side by side and translating the data from the writer’s schema into the reader’s schema.
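Schema resolution can be pictured as matching fields by name and filling in defaults. The sketch below is a simplification written for these notes: it works on already-decoded values, not Avro bytes, and `resolve`, `NO_DEFAULT`, and the schema layout (a list of `(name, default)` pairs) are assumptions of this example, not part of the Avro library.

```python
NO_DEFAULT = object()   # sentinel: the field has no default (None is a valid Avro default)

def resolve(values, writer_fields, reader_fields):
    """Translate a record from the writer's schema into the reader's schema.

    `values` are listed in the writer's field order. Each schema is a list of
    (name, default) pairs.
    """
    written = dict(zip((name for name, _ in writer_fields), values))
    record = {}
    for name, default in reader_fields:
        if name in written:
            record[name] = written[name]      # field known to both sides
        elif default is not NO_DEFAULT:
            record[name] = default            # reader-only field: use its default
        else:
            raise ValueError(f"no value or default for field {name!r}")
    return record                             # writer-only fields are dropped

writer = [("userName", NO_DEFAULT), ("favoriteNumber", None)]
reader = [("favoriteNumber", None), ("userName", NO_DEFAULT), ("interests", [])]
print(resolve(["Martin", 1337], writer, reader))
```

Field order can differ between the two schemas because the match is by name; a reader-only field without a default is what makes two schemas incompatible.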

The Merits of Schemas

- Compact since field names can be omitted.

- Schema is a form of documentation.

- A database of schemas enables checking backward & forward compatibility before changes are deployed.

- Code-gen from the schema enables compile-time type checking in statically typed languages.

Modes of Dataflow

Dataflow Through Databases

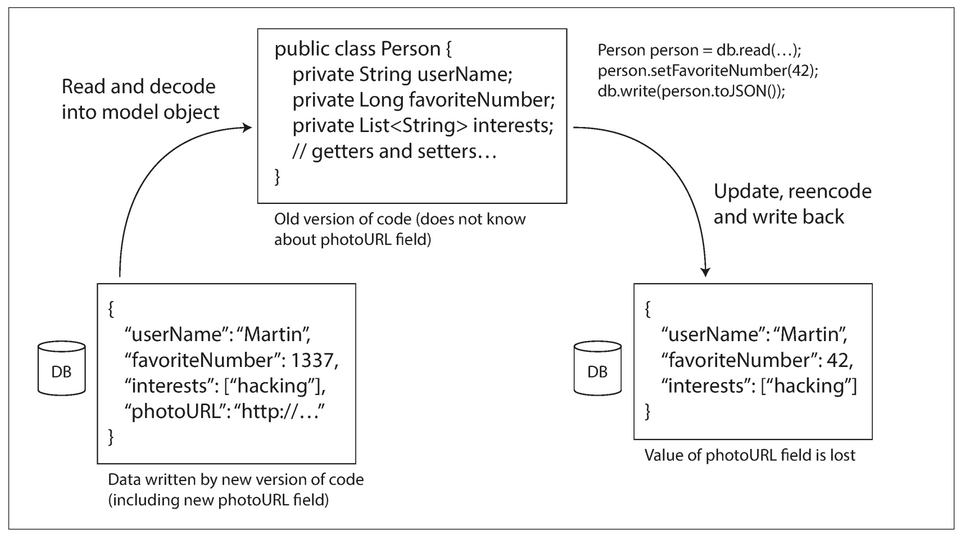

- In a database, the process that writes to the database encodes the data, and the process that reads from the database decodes it.

- Data outlives code.

- When an older version of the application updates data previously written by a newer version of the application, data may be lost if you’re not careful.

Dataflow Through Services: REST and RPC

- Client-server is a common arrangement for processes that want to communicate over a network.

- The servers expose an API over the network, and the clients can connect to the servers to make requests to that API. The API exposed by the server is known as a service.

- The client encodes a request, the server decodes the request and encodes a response, and the client finally decodes the response.

- An application can be decomposed into smaller services by area of functionality, such that one service is a client of another. This is known as service-oriented architecture (SOA) or microservices architecture.

Web services

- When HTTP is used as the underlying protocol for talking to the service, it's called a web service. This is a misnomer though, as web services are not only used on the web.

- REST is not a protocol, but rather a design philosophy that builds upon the principles of HTTP. It emphasizes simple data formats, using URLs for identifying resources and using HTTP features for cache control, authentication, and content type negotiation.

- An API designed according to the principles of REST is called RESTful.

- A definition format such as OpenAPI (aka Swagger), can be used to describe RESTful APIs and produce documentation.

- By contrast, SOAP is an XML-based protocol for making network API requests. Although it is most commonly used over HTTP, it aims to be independent from HTTP and avoids using most HTTP features.

- The API of a SOAP web service is described using an XML-based language called the Web Services Description Language, or WSDL.

The problems with remote procedure calls (RPCs)

- The RPC model tries to make a request to a remote network service look the same as calling a local function (this abstraction is called location transparency).

- RPC is flawed because a network request is different from a local function call:

- A local function call is predictable and either succeeds or fails, depending only on parameters that are under your control.

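- A local function call either returns a result, throws an exception, or never returns. A network request has another possible outcome: it can time out without returning a result, so the caller doesn't know whether the request got through.

- The response to a network request can get lost, so retrying may perform the action more than once.

- The latency of a network request is wildly variable.

- References (pointers) to objects in local memory can be efficiently passed to local functions. For a network request, all parameters must be encoded into a sequence of bytes.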

- Translation of data-types is necessary if the client & server are written in different programming languages.

Current directions for RPC

- Thrift and Avro come with RPC support included.

- gRPC is an RPC implementation using Protocol Buffers.

- Finagle uses Thrift.

- Rest.li uses JSON over HTTP.

- The new generation of RPC frameworks are more explicit about the fact that a remote request is different from a local function call.

Message-Passing Dataflow

- Asynchronous message-passing systems are somewhere between RPC and databases:

- They are similar to RPC in that a client’s request (usually called a message) is delivered to another process with low latency.

- They are similar to databases in that the message is not sent via a direct network connection, but goes via an intermediary called a message broker (also called a message queue or message-oriented middleware), which stores the message temporarily.

- Using a message broker has several advantages compared to direct RPC:

- It can act as a buffer if the recipient is unavailable or overloaded, and thus improve system reliability.

- It can automatically redeliver messages to a process that has crashed, and thus prevent messages from being lost.

- It avoids the sender needing to know the IP address and port number of the recipient.

- It allows one message to be sent to several recipients.

- It logically decouples the sender from the recipient (the sender just publishes messages and doesn’t care who consumes them).

- Message-passing communication is usually one-way: a sender normally doesn’t expect to receive a reply to its messages.

- This communication pattern is asynchronous: the sender fires and forgets.

Message brokers

- Open source implementations: RabbitMQ, ActiveMQ, HornetQ, NATS, Apache Kafka, etc.

- The detailed delivery semantics vary by implementation and configuration, but in general, message brokers are used as follows:

One process sends a message to a named queue/topic, and the broker ensures that the message is delivered to one or more consumers of or subscribers to that queue/topic. There can be many producers and many consumers on the same topic.

- Message brokers typically don’t enforce any particular data model; a message is just a sequence of bytes with some metadata.

- Messages are encoded by the sender and decoded by the recipient.
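The topic-based pattern described above can be sketched as a toy in-memory broker. This is only an illustration of the publish/subscribe shape: the `Broker` class is hypothetical, it delivers synchronously, and it does none of the buffering or redelivery that RabbitMQ, Kafka, and similar systems provide.

```python
import json
from collections import defaultdict

class Broker:
    """Toy in-memory broker: named topics, many producers, many subscribers."""

    def __init__(self):
        self._subscribers = defaultdict(list)   # topic -> list of callbacks

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message: bytes):
        # The broker treats the message as opaque bytes; it never decodes it.
        for callback in self._subscribers[topic]:
            callback(message)

broker = Broker()
received = []
# The consumer decodes; the producer encodes. The broker sees only bytes.
broker.subscribe("orders", lambda raw: received.append(json.loads(raw)))
broker.publish("orders", json.dumps({"order_id": 1}).encode("utf-8"))
print(received)
```

The producer only names a topic, so it never needs to know who the subscribers are or where they run.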

Distributed actor frameworks

- The actor model is a programming model for concurrency in a single process:

- Rather than dealing directly with threads, logic is encapsulated in actors.

- Each actor typically represents one client or entity, and it may have some local state (which is not shared with any other actor).

- It communicates with other actors by sending and receiving asynchronous messages.

- Message delivery is not guaranteed: in certain error scenarios, messages will be lost.

- Since each actor processes only one message at a time, it doesn’t need to worry about threads, and each actor can be scheduled independently by the framework.

- In distributed actor frameworks, this programming model is used to scale an application across multiple nodes. Messages are transparently encoded into a byte sequence, sent over the network, and decoded on the other side.

- Location transparency works better in the actor model than in RPC, because the actor model already assumes that messages may be lost, even within a single process.

- A distributed actor framework essentially integrates a message broker and the actor programming model into a single framework.

- Three popular distributed actor frameworks:

- Akka

- Orleans

- Erlang OTP

Aside: A rolling upgrade is where a new version of a service is gradually deployed to a few nodes at a time, rather than deploying to all nodes simultaneously. Rolling upgrades allow new versions of a service to be released without downtime (thus encouraging frequent small releases over rare big releases) and make deployments less risky (allowing faulty releases to be detected and rolled back before they affect a large number of users).

Chapter 5 — Replication

The major difference between a thing that might go wrong and a thing that cannot possibly go wrong is that when a thing that cannot possibly go wrong goes wrong it usually turns out to be impossible to get at or repair. — Douglas Adams, Mostly Harmless (1992)

- Replication means keeping a copy of the same data on multiple machines that are connected via a network.

- Benefits:

- Low latency: Data can be kept geographically close to users.

- High availability: The system can continue working even if some machines fail.

- Scalability: Increased throughput with many machines.

- Each node that stores a copy of the database is called a replica.

Single leader replication

- aka: leader-based, active/passive or master–slave replication.

- How it works:

- One of the replicas is designated the leader (aka: master or primary). All writes go to the leader.

- The other replicas are known as followers (aka: read replicas, slaves, secondaries, or hot standbys).

- Whenever data is written to the leader, it also sends the data change to all of its followers as part of a replication log or change stream. The followers use this log to update their local copy of the database.

- A client can read from either the leader or any of the followers. Followers are read-only from the client's POV.

- Used by:

- Relational databases: PostgreSQL, MySQL.

- Nonrelational databases: MongoDB, RethinkDB, and Espresso.

- Distributed message brokers: Kafka, RabbitMQ.

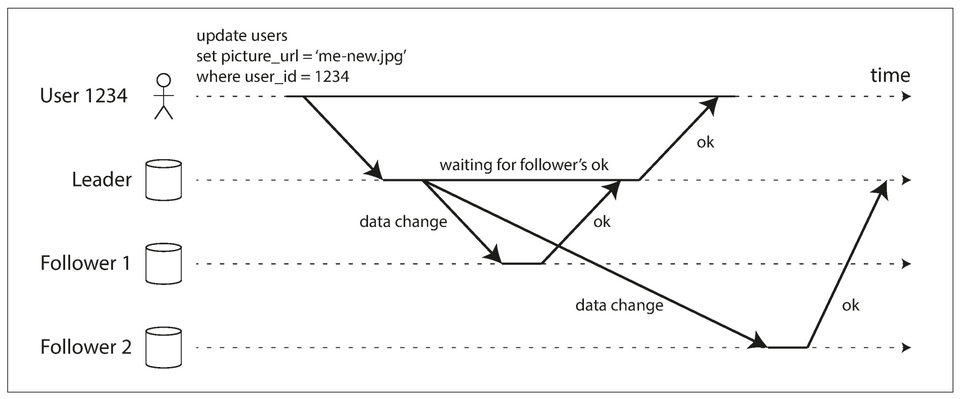

Synchronous Versus Asynchronous Replication

- Semi-synchronous example: The replication to follower 1 is synchronous, and the replication to follower 2 is asynchronous.

- Advantage of synchronous replication: the follower is guaranteed to have an up-to-date copy of the data that is consistent with the leader.

- Disadvantage of synchronous replication: Writes can't be processed if the synchronous replica is unavailable.

- Advantage of full asynchronous replication: the leader can continue processing writes, even if all of its followers have fallen behind.

- Disadvantage of full asynchronous replication: a write is not guaranteed to be durable, even if it has been confirmed to the client.

Setting Up New Followers

- Setting up a new follower without downtime:

- Take a consistent snapshot of the leader’s database at some point in time. A standard file copy is insufficient as the database can be modified in between the copy operation.

- Copy the snapshot to the new follower node.

- The follower connects to the leader and requests all the data changes that have happened since the snapshot was taken.

- When the follower has processed the backlog of data changes since the snapshot we say it has caught up.
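The steps above can be sketched with a leader that keeps an ordered replication log and a snapshot that remembers its log position. The `Leader` and `Follower` classes here are hypothetical and in-memory; a real database would associate the snapshot with something like a log sequence number in its own replication log.

```python
class Leader:
    def __init__(self):
        self.data = {}
        self.log = []                    # replication log: ordered (key, value) changes

    def write(self, key, value):
        self.data[key] = value
        self.log.append((key, value))

    def snapshot(self):
        """Consistent snapshot: a copy of the data plus the log position it reflects."""
        return dict(self.data), len(self.log)

    def changes_since(self, position):
        return self.log[position:]

class Follower:
    def __init__(self, snapshot_data, position):
        self.data = dict(snapshot_data)  # load the copied snapshot
        self.position = position         # log position the snapshot corresponds to

    def catch_up(self, leader):
        """Request and apply every change made after our current log position."""
        for key, value in leader.changes_since(self.position):
            self.data[key] = value
            self.position += 1

leader = Leader()
leader.write("a", 1)
data, position = leader.snapshot()       # snapshot taken here
leader.write("b", 2)                     # writes continue while the snapshot is copied
leader.write("a", 3)
follower = Follower(data, position)
follower.catch_up(leader)
print(follower.data == leader.data)
```

Because the follower tracks its position in the log, the same `catch_up` call also covers recovery after a follower crash or network interruption.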

Handling Node Outages

How to achieve high availability with leader-based replication.

Follower failure: Catch-up recovery

- After a failure, a follower can recover by requesting from the leader all the changes between its last processed transaction and now. Similar to the last two steps mentioned in setting up new followers.

Leader failure: Failover

- Failover is the process of handling a leader failure:

- one of the followers needs to be promoted to be the new leader,

- clients need to be reconfigured to send their writes to the new leader, and

- the other followers need to start consuming data changes from the new leader.

- An automatic failover process usually consists of the following steps:

- Determining that the leader has failed: Timeouts are usually used as there is no better alternative.

- Choosing a new leader: Through an election process or a single elected controller node. The replica with the most recent changes is the best candidate given this minimizes data loss.

- Reconfiguring the system to use the new leader and ensuring that the old leader becomes a follower whenever it comes back on the network.

- Failover is fraught with things that can go wrong:

- If asynchronous replication is used, the new leader may not have received all the writes from the old leader before it failed, leading to potential data loss.

- Discarding writes is especially dangerous if other storage systems outside of the database need to be coordinated with the database contents.

- In certain fault scenarios, it could happen that two nodes both believe that they are the leader. This situation is called split brain and it can lead to data loss or corruption.

- What is the right timeout before the leader is declared dead?

- Short timeout: can lead to unnecessary failovers.

- Long timeout: leads to longer recovery times.
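Two pieces of the automatic failover process lend themselves to a short sketch: timeout-based failure detection and picking the most up-to-date follower. The function names and the idea of comparing replication log positions are assumptions for illustration; real systems add an election or controller node and must also guard against split brain.

```python
def leader_is_dead(last_heartbeat, now, timeout):
    """Declare the leader dead once it has been silent for longer than `timeout` seconds."""
    return now - last_heartbeat > timeout

def choose_new_leader(followers):
    """Pick the follower with the highest applied log position, to minimize lost writes.

    `followers` maps a node name to the last replication log position it has applied.
    """
    return max(followers, key=lambda name: followers[name])

print(leader_is_dead(last_heartbeat=100.0, now=131.0, timeout=30.0))
print(choose_new_leader({"f1": 41, "f2": 57, "f3": 50}))
```

The `timeout` argument is exactly the trade-off listed above: a smaller value reacts faster but is more likely to fire during a temporary load spike or network glitch.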

Implementation of Replication Logs

Several different leader-based replication methods are used in practice.

Statement-based replication

- The leader logs every write request (statement) that it executes and sends that statement log to its followers.

- For a relational database, this means that every `INSERT`, `UPDATE`, or `DELETE` statement is forwarded to followers.

- Issues:

- Nondeterministic functions (like `NOW()`, `RAND()`) in statements can produce different values on replicas.

- If statements depend on existing data in the database, they must be executed in exactly the same order on each replica in order to have the same effect.

- Statements that have side effects (e.g: triggers, stored procedures, user-defined functions) may result in different side effects occurring on each replica.

- Some of the issues can be addressed, like replacing `NOW()` with an exact date-time on the leader. However, there are many edge cases.

- VoltDB uses statement-based replication, and makes it safe by requiring transactions to be deterministic.

Write-ahead log (WAL) shipping

- As discussed in Chapter 3, storage engines maintain a log that is an append-only sequence of bytes containing all writes to the database.

- This log can be used to build a replica on another node: besides writing the log to disk, the leader also sends it across the network to its followers.

- Disadvantages:

- Couples replication to the storage engine: the log describes the data on a very low level — a WAL contains details of which bytes were changed in which disk blocks. If the database changes its storage format, upgrading to this version will require downtime if replicas can't use the same log.

- Used by: PostgreSQL, Oracle, etc.

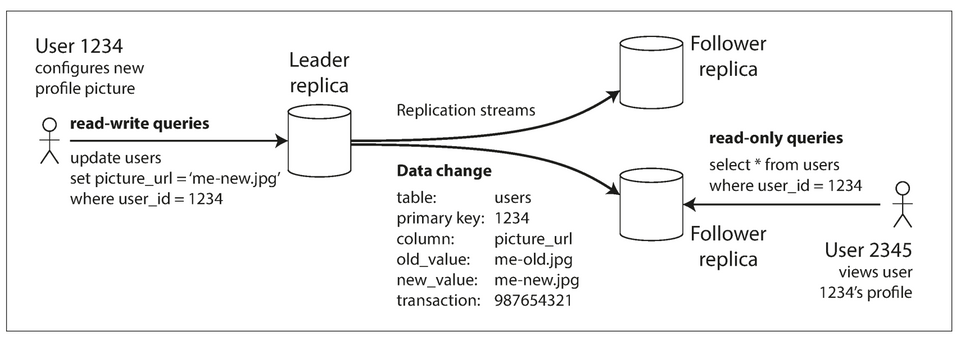

Logical (row-based) log replication

- An alternative is to use different log formats for replication and for the storage engine, which allows the replication log to be decoupled from the storage engine internals.

- This kind of replication log is called a logical log, to distinguish it from the storage engine’s (physical) data representation.

- A logical log for a relational database is usually a sequence of records describing writes to database tables at the granularity of a row:

- For an inserted row, the log contains the new values of all columns.

- For a deleted row, the log contains enough information to uniquely identify the row that was deleted.

- For an updated row, the log contains enough information to uniquely identify the updated row, and the new values.
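A row-based log can be pictured as a list of small records that a follower replays. In this sketch a table is a Python dict keyed by primary key, and the record layout (`op`, `key`, `row`, `new_values`) is invented for illustration; it is not the format of any particular database.

```python
def apply_change(table, change):
    """Apply one row-level log record to `table`, a dict keyed by primary key."""
    kind, key = change["op"], change["key"]
    if kind == "insert":
        table[key] = dict(change["row"])          # new values of all columns
    elif kind == "update":
        table[key].update(change["new_values"])   # the key identifies the row to change
    elif kind == "delete":
        del table[key]                            # the key identifies the row to remove
    else:
        raise ValueError(f"unknown operation: {kind}")

log = [
    {"op": "insert", "key": 1, "row": {"name": "Lucy", "city": "Idaho"}},
    {"op": "insert", "key": 2, "row": {"name": "Alain", "city": "Paris"}},
    {"op": "update", "key": 1, "new_values": {"city": "London"}},
    {"op": "delete", "key": 2},
]
follower_table = {}
for change in log:
    apply_change(follower_table, change)
print(follower_table)
```

Since the records carry concrete values and not statements, replaying them gives the same result on every replica, whatever the storage engine looks like underneath.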

Trigger-based replication

- Unlike the methods above that are done at the database level, this is done at the application level.

- Triggers and stored procedures can be used to implement this.

- Pros compared to database level replication:

- Flexible

- Cons compared to database level replication:

- Greater overhead

- Prone to bugs and limitations

Problems with Replication Lag

- Replication lag is the delay between a write happening on the leader and being reflected on a follower.

- With asynchronous replication, replication lag can lead to inconsistencies between a follower and the leader.

- This inconsistency is temporary, as the follower eventually catches up with the latest data. This effect is known as eventual consistency.

Reading Your Own Writes

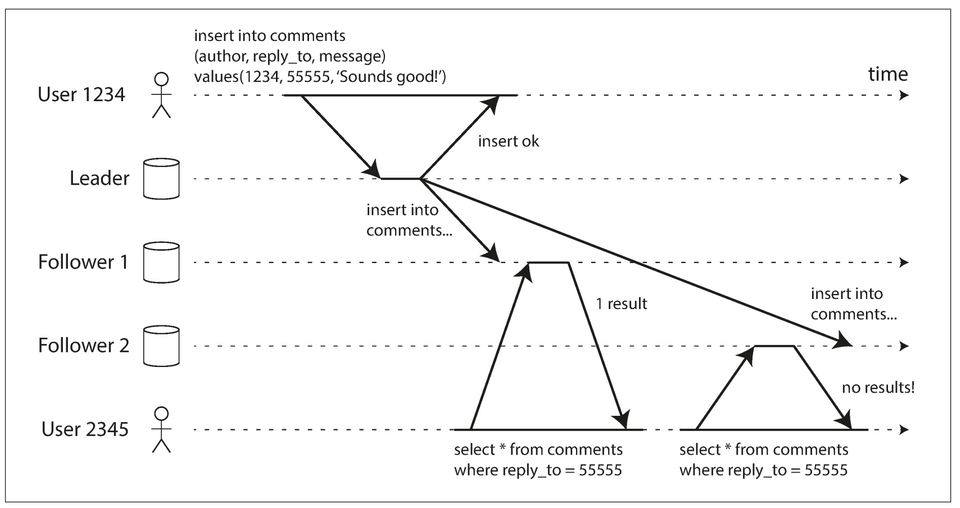

- Issue: A user makes a write, followed by a read from a stale replica. To prevent this anomaly, we need read-after-write/read-your-writes consistency.

- This is a consistency guarantee that a writer will see all their latest writes whenever they read.

- Ways to implement:

- When reading something that the user may have modified, read it from the leader; otherwise, read it from a follower. E.g: Always read a user's own profile from the leader, other profiles can be read from followers. This doesn't work well if most things are editable by the user.

- Prevent reads to lagging replicas for a duration of time.

- The client can remember the timestamp of its most recent write — then the system can ensure that the replica serving any reads for that user reflects updates at least until that timestamp. This doesn't work if a user uses multiple devices.
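The timestamp-based approach can be sketched as a routing decision. The `pick_replica` function and the shape of its arguments are assumptions for this example; the timestamp could equally be a logical one, such as a log sequence number.

```python
def pick_replica(last_write_ts, replicas, leader):
    """Return a replica that has applied the client's most recent write.

    `replicas` maps a follower name to the timestamp of the latest write it
    has applied. Falls back to the leader when every follower is still behind.
    """
    for name, applied_ts in replicas.items():
        if applied_ts >= last_write_ts:
            return name
    return leader

followers = {"f1": 100, "f2": 110}
print(pick_replica(105, followers, "leader"))
print(pick_replica(120, followers, "leader"))
```

The multi-device limitation shows up directly in the signature: `last_write_ts` has to come from somewhere, and a second device doesn't have it unless that metadata is centralized.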

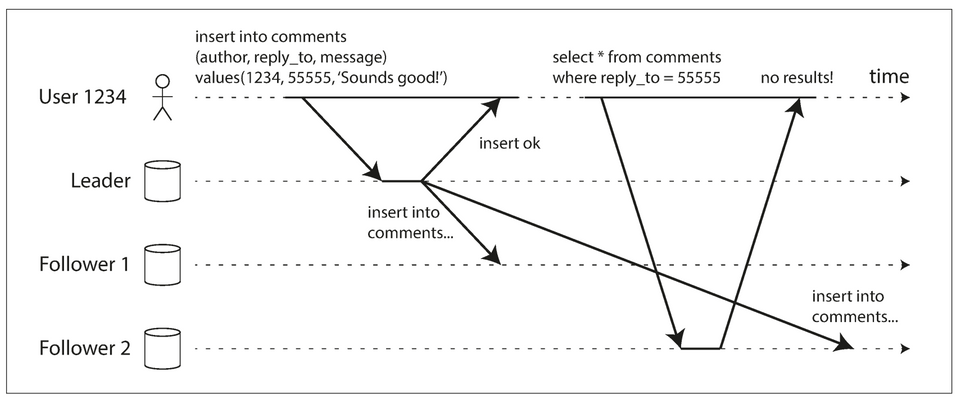

Monotonic Reads

- A user first reads from a fresh replica, then from a stale replica. Time appears to go backward. To prevent this anomaly, we need monotonic reads.

- Monotonic reads is a weaker guarantee than strong consistency (as older data can still be returned), but a stronger guarantee than eventual consistency.

- Monotonic reads only means a user will not read older data after having previously read newer data.

- One way of achieving monotonic reads is to make sure that each user always makes their reads from the same replica (different users can read from different replicas).
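One way to pin each user to a replica is a stable hash of the user ID. The sketch below uses `hashlib` because Python's built-in `hash()` for strings varies between processes; `replica_for_user` is a name made up for this example.

```python
import hashlib

def replica_for_user(user_id, replicas):
    """Map a user to one fixed replica using a stable hash of the user ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(replicas)
    return replicas[index]

replicas = ["replica-a", "replica-b", "replica-c"]
print(replica_for_user("user-42", replicas) == replica_for_user("user-42", replicas))
```

If the chosen replica fails, the user's reads have to be rerouted to another replica, at which point the guarantee can be briefly violated.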

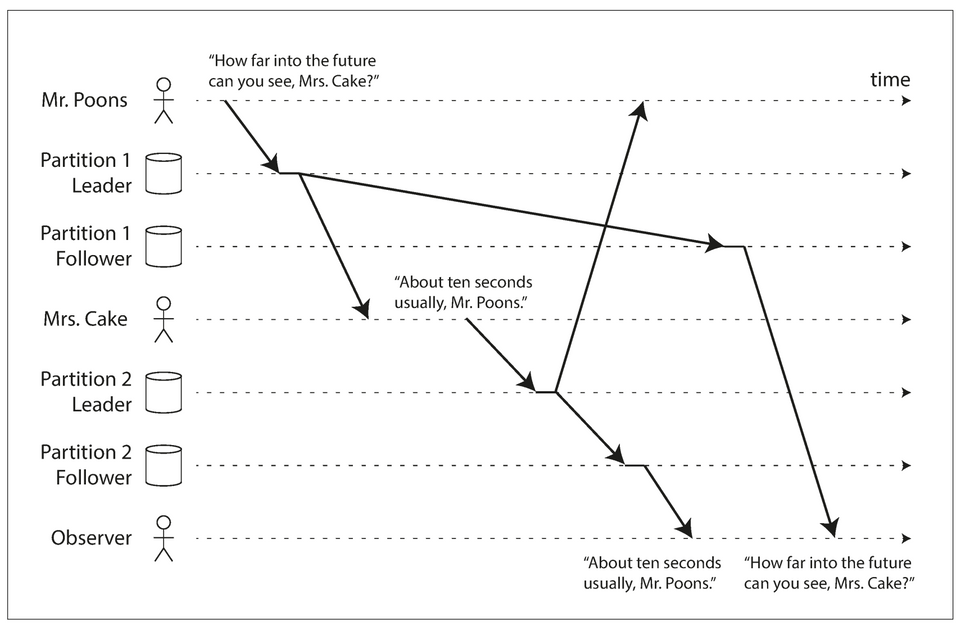

Consistent Prefix Reads

- If some partitions are replicated slower than others, an observer may see the answer before they see the question (violation of causality).

- Consistent prefix reads is a consistency guarantee that if a sequence of writes happens in a certain order, then anyone reading those writes will see them appear in the same order.

- This anomaly only affects partitioned databases, because a non-partitioned database applies all writes through a single leader and therefore has only one write order.

- One way of achieving consistent prefix reads is to make sure that any writes that are causally related to each other are written to the same partition.

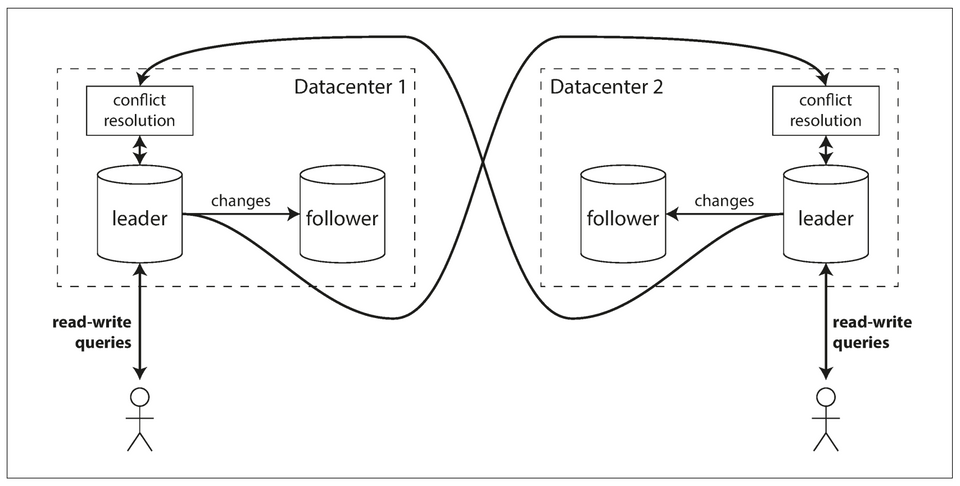

Multi-Leader Replication

- Multi-Leader replication (aka: master–master or active/active replication) allows more than one node to accept writes. In this setup, each leader simultaneously acts as a follower to the other leaders.

Use Cases for Multi-Leader Replication

Multi-datacenter operation

In a multi-leader configuration, you can have a leader in each datacenter. Within each datacenter, regular leader–follower replication is used; between datacenters, each datacenter’s leader replicates its changes to the leaders in other datacenters.

Multi-leader replication is often a retrofitted feature in databases, which makes it dangerous: it can have surprising interactions with other database features.

External tools enabling multi-leader replication on relational databases:

- Tungsten Replicator for MySQL

- BDR for PostgreSQL

- GoldenGate for Oracle

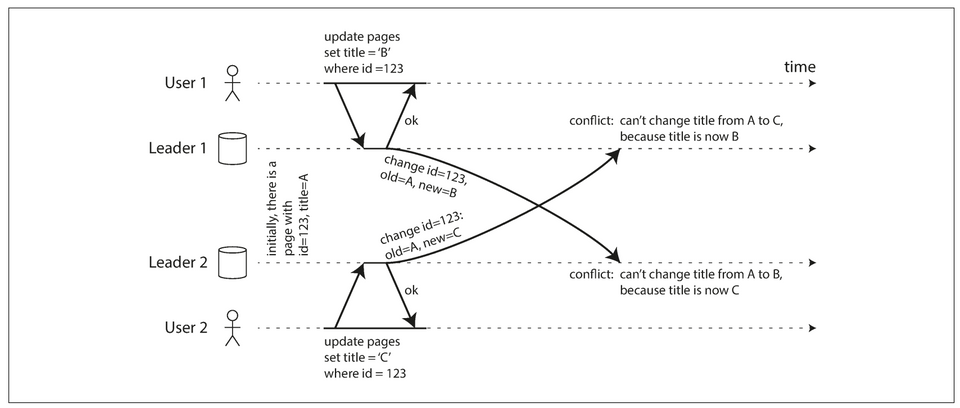

Major downside of multi-leader replication: the same data may be concurrently modified in two different datacenters, and those write conflicts must be resolved.

Comparison of single-leader vs multi-leader in a multi-datacenter environment:

| Feature | Single-leader | Multi-leader |
| --- | --- | --- |
| Performance | Added latency because every write goes over the internet to the datacenter with the leader. | Inter-datacenter network delay can be hidden by writing to a local datacenter and asynchronously syncing with other datacenters. |
| Tolerance of datacenter outages | Failover to a follower in another datacenter. | Datacenters can continue operating independently. |
| Tolerance of network problems | Sensitive to problems in the inter-datacenter link because writes are usually made over this link. | With asynchronous replication, network problems are tolerable as writes can be processed locally. |

Clients with offline operation

- In this case, every device has a local database that acts as a leader (it accepts write requests), and there is an asynchronous multi-leader replication process (sync) between the replicas on all devices.

- CouchDB is designed for this mode of operation.

Collaborative editing

- Real-time collaborative editing applications allow several people to edit a document simultaneously.

- Document locks can guarantee no edit conflicts, but this disables simultaneous editing.

Handling Write Conflicts

Synchronous versus asynchronous conflict detection

- Conflict detection can be made synchronous — i.e: wait for the write to be replicated to all replicas before telling the user that the write was successful.

- However, this loses the main advantage of multi-leader replication: allowing each replica to accept writes independently.

Conflict avoidance

- The application can ensure that all writes for a particular record go through the same leader, to avoid conflicts. Each record can have a "home" leader.

- Conflict avoidance can break down whenever you want to change a record's "home" leader. You have to deal with the possibility of concurrent writes on different leaders.

Converging toward a consistent state

- In a multi-leader configuration, there is no defined ordering of writes, so it’s not clear what the final value should be.

- Every replication scheme must ensure that the data is eventually the same in all replicas. Thus, the database must resolve the conflict in a convergent way, which means that all replicas must arrive at the same final value when all changes have been replicated.

- Some ways of achieving convergent conflict resolution:

- Give each write a unique ID (e.g: a timestamp, a UUID) and pick the write with the highest ID as the winner. Prone to data-loss.

- Give each replica a unique ID, and let writes that originated at a higher numbered replica always take precedence. Prone to data-loss.

- Somehow merge the values together—e.g., order them alphabetically and then concatenate them.

- Record the conflict in an explicit data structure that preserves all information, and write application code that resolves the conflict at some later time (perhaps by prompting the user).
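The first two approaches can be combined into a last-write-wins rule: compare timestamps and break ties by replica ID. The sketch below is an illustration under those assumptions (the `lww_merge` function and the write layout are invented here), and it shows both the convergence and the data loss.

```python
def lww_merge(current, incoming):
    """Keep the write with the higher (timestamp, replica_id) pair; the other is discarded."""
    return max(current, incoming, key=lambda w: (w["ts"], w["replica"]))

write_dc1 = {"ts": 10, "replica": "dc1", "value": "B"}
write_dc2 = {"ts": 10, "replica": "dc2", "value": "C"}

# Both replicas end up with the same winner, whatever order the writes arrive in.
print(lww_merge(write_dc1, write_dc2) == lww_merge(write_dc2, write_dc1))
```

Convergence holds because the comparison gives every pair of writes a fixed winner, but the losing write (`"B"` here) silently disappears.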

Custom conflict resolution logic

Resolution can be app specific, thus most tools allow application code to handle conflicts. This code is run:

- On write: As soon as the database system detects a conflict in the log of replicated changes, it calls the conflict handler. Bucardo does this.

- On read: Conflicting writes are stored and returned to the application whenever it tries to read the affected record. It can auto resolve the conflict, or prompt the user to do so. CouchDB does this.

Automatic Conflict Resolution

A few lines of research are worth mentioning:

- Conflict-free replicated datatypes (CRDTs) are a family of data structures for sets, maps, ordered lists, counters, etc. that can be concurrently edited by multiple users, and which automatically resolve conflicts in sensible ways. Some CRDTs have been implemented in Riak 2.0.

- Mergeable persistent data structures track history explicitly, similarly to the Git version control system, and use a three-way merge function (whereas CRDTs use two-way merges).

- Operational transformation is the conflict resolution algorithm behind collaborative editing applications such as Etherpad and Google Docs. It was designed particularly for concurrent editing of an ordered list of items, such as the list of characters that constitute a text document.
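To give a feel for how a CRDT resolves conflicts automatically, here is a sketch of a grow-only counter, one of the simplest CRDTs. It is a generic textbook construction picked for illustration, not something taken from Riak's implementation; the `GCounter` class name is an assumption of this example.

```python
class GCounter:
    """Grow-only counter: one slot per replica, merged by taking the per-slot maximum."""

    def __init__(self, replica_id):
        self.replica_id = replica_id
        self.counts = {}                 # replica_id -> increments made at that replica

    def increment(self, amount=1):
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def merge(self, other):
        """Two-way merge; safe to apply in any order and any number of times."""
        for replica, count in other.counts.items():
            self.counts[replica] = max(self.counts.get(replica, 0), count)

    def value(self):
        return sum(self.counts.values())

a, b = GCounter("a"), GCounter("b")
a.increment()
a.increment()
b.increment(3)           # concurrent increments on another replica
a.merge(b)
b.merge(a)
print(a.value(), b.value())
```

Each replica only ever writes to its own slot, so concurrent increments never overwrite each other and both replicas converge on the same total.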

What is a conflict?

Editing the same field on the same record is an obvious conflict. But some conflicts are subtle: like the ones that violate some application constraints when two distinct records are edited by two independent leaders.

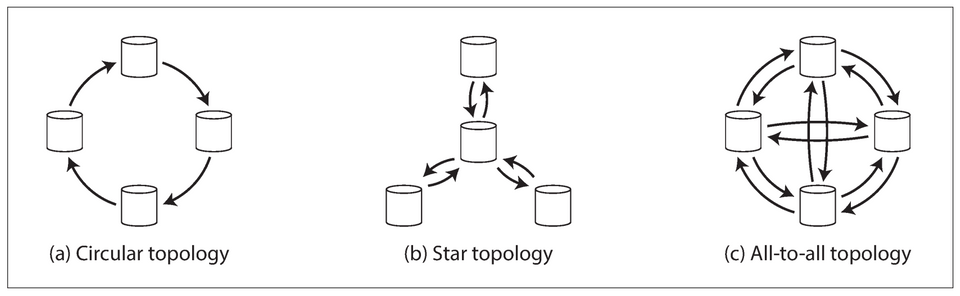

Multi-Leader Replication Topologies

- A replication topology describes the communication paths along which writes are propagated from one node to another.

- Three example topologies in which multi-leader replication can be set up.

- All-to-all topology: every leader sends its writes to every other leader.

- Circular topology: each node receives writes from one node and forwards those writes (plus any writes of its own) to one other node.

- Star topology: one designated root node forwards writes to all of the other nodes. The star topology can be generalized to a tree.

- To prevent infinite replication loops, each node is given a unique identifier, and in the replication log, each write is tagged with the identifiers of all the nodes it has passed through. Thus, a node can ignore any incoming data change that is already tagged with its own identifier.
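The loop-prevention rule can be sketched for a circular topology as follows. This is a toy in-memory model under stated assumptions: the `Node` and `ReplicatedWrite` classes, the `seen_by` set, and the `applied` counter are all hypothetical names, and forwarding is a direct method call rather than a network message.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ReplicatedWrite:
    key: str
    value: str
    seen_by: set[str] = field(default_factory=set)  # IDs of nodes already passed through


class Node:
    def __init__(self, node_id: str):
        self.node_id = node_id
        self.data: dict[str, str] = {}
        self.next_node: Optional["Node"] = None  # circular topology: one outgoing link
        self.applied = 0                          # how many writes this node applied

    def receive(self, write: ReplicatedWrite) -> None:
        if self.node_id in write.seen_by:
            return  # already processed here: drop the write to break the loop
        write.seen_by.add(self.node_id)
        self.data[write.key] = write.value
        self.applied += 1
        if self.next_node is not None:
            self.next_node.receive(write)


nodes = [Node("n1"), Node("n2"), Node("n3")]
for i, node in enumerate(nodes):
    node.next_node = nodes[(i + 1) % len(nodes)]  # n1 -> n2 -> n3 -> n1

nodes[0].receive(ReplicatedWrite("title", "B"))
```

The write travels n1, n2, n3 and is dropped when it arrives back at n1, so every node applies it exactly once.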

Leaderless Replication

- Leaderless replication abandons the concept of a leader and allows any replica to directly accept writes from clients.

- Clients send each write to several nodes, and read from several nodes in parallel in order to detect and correct nodes with stale data.

- Amazon built Dynamo, its in-house datastore, with leaderless replication. Riak, Cassandra, and Voldemort are open source datastores inspired by Dynamo.

- In some leaderless implementations, the client directly sends its writes to several replicas, while in others, a coordinator node does this on behalf of the client.

Writing to the Database When a Node Is Down

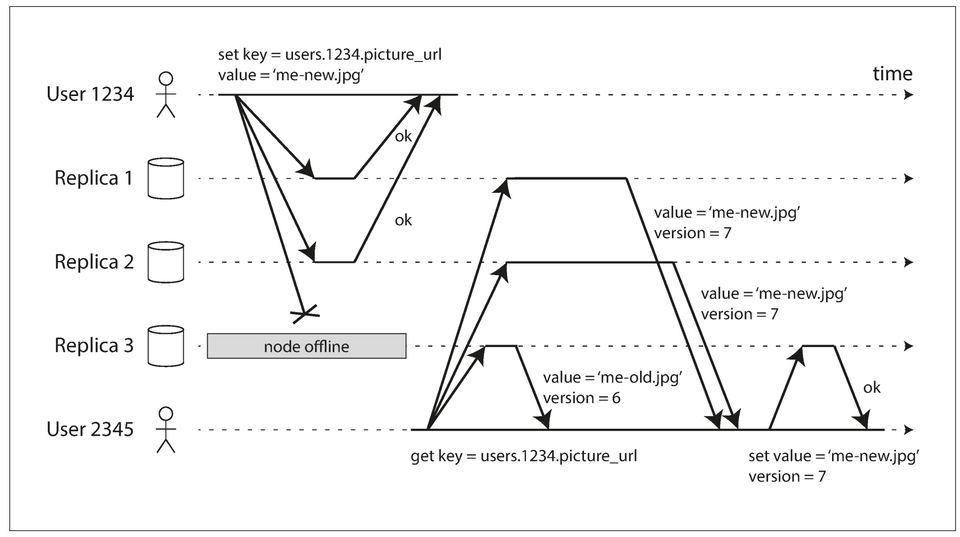

- A quorum write, quorum read, and read repair after a node outage.

- When a client reads from the database, it doesn’t just send its request to one replica: read requests are also sent to several nodes in parallel. The client may get different responses from different nodes; i.e: the up-to-date value from one node and a stale value from another.

Read repair and anti-entropy

Two mechanisms are often used in Dynamo-style datastores to ensure that eventually all the data is copied to every replica:

- Read Repair: When a client makes a read from several nodes in parallel, it can detect any stale responses, and update the stale node with fresher values. With only this mechanism, values that are rarely read may be missing from some replicas.

- Anti-entropy process: A background process that constantly looks for differences in the data between replicas and copies any missing data from one replica to another, in no particular order and probably with some delay.
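Read repair can be sketched as below. This is a simplified model, assuming each replica is a plain dictionary and each value carries a hypothetical per-key version number (`Versioned.version`) that tells the client which response is newer. It also assumes the key exists on at least one replica.

```python
from typing import NamedTuple


class Versioned(NamedTuple):
    version: int  # hypothetical monotonically increasing version number per key
    value: str


def read_with_repair(replicas: list[dict[str, Versioned]], key: str) -> str:
    """Read `key` from every replica, return the newest value, repair stale replicas."""
    responses = [replica.get(key) for replica in replicas]
    newest = max((v for v in responses if v is not None), key=lambda v: v.version)
    for replica, seen in zip(replicas, responses):
        if seen is None or seen.version < newest.version:
            replica[key] = newest  # write the fresher value back to the stale replica
    return newest.value


r1 = {"x": Versioned(7, "new")}
r2 = {"x": Versioned(7, "new")}
r3 = {"x": Versioned(6, "old")}  # this replica missed the latest write
result = read_with_repair([r1, r2, r3], "x")
```

Because the repair only happens as a side effect of a read, a key that is never read stays stale on `r3`, which is why an anti-entropy process is used alongside it.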

Quorums for reading and writing

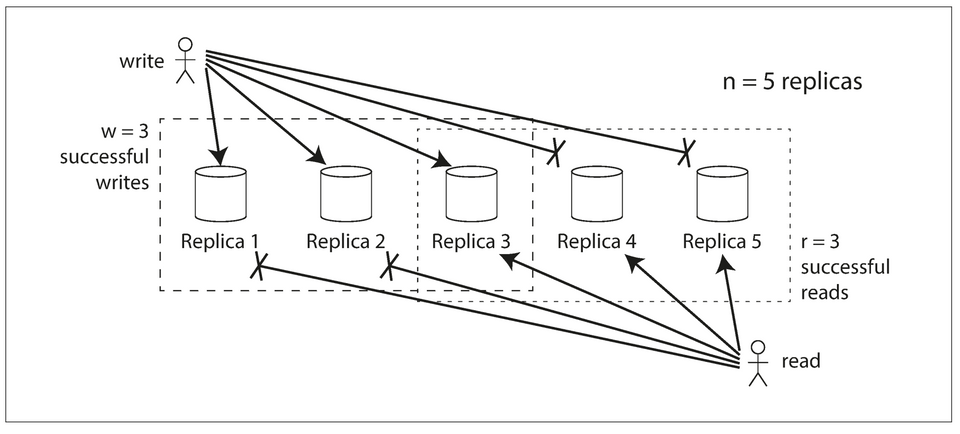

If there are `n` replicas, every write must be confirmed by `w` nodes to be considered successful, and we must query at least `r` nodes for each read. As long as `w + r > n`, we expect to get an up-to-date value when reading, because at least one of the `r` nodes we’re reading from must be up to date. Reads and writes that obey these `r` and `w` values are called quorum reads and writes. You can think of `r` and `w` as the minimum number of votes required for the read or write to be valid.

- A common choice is to make `n` an odd number (typically 3 or 5) and to set `w = r = (n + 1) / 2` (rounded up).

- A workload with few writes and many reads may benefit from setting `w = n` and `r = 1`. This makes reads faster, but has the disadvantage that just one failed node causes all database writes to fail.

- The quorum condition, `w + r > n`, allows the system to tolerate unavailable nodes as follows:

- If `w < n`, we can still process writes if a node is unavailable.

- If `r < n`, we can still process reads if a node is unavailable.

- With `n = 3`, `w = 2`, `r = 2` we can tolerate one unavailable node.

- With `n = 5`, `w = 3`, `r = 3` we can tolerate two unavailable nodes.

- Normally, reads and writes are always sent to all `n` replicas in parallel. The parameters `w` and `r` determine how many nodes we wait for, i.e. how many of the `n` nodes need to report success before we consider the read or write to be successful.
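The quorum arithmetic above is easy to check in a few lines. The function names below are made up for illustration. `always_overlap` brute-forces every possible write set and read set to confirm that `w + r > n` really does force at least one node in common.

```python
from itertools import combinations


def is_quorum(n: int, w: int, r: int) -> bool:
    """True if the quorum condition w + r > n holds."""
    return w + r > n


def tolerated_failures(n: int, w: int, r: int) -> int:
    """Nodes that can be down while reads and writes can both still reach their quorum."""
    return n - max(w, r)


def majority(n: int) -> int:
    """Smallest w = r that satisfies w + r > n."""
    return n // 2 + 1


def always_overlap(n: int, w: int, r: int) -> bool:
    """Brute-force check: does every write set share a node with every read set?"""
    nodes = range(n)
    return all(
        set(write_set) & set(read_set)
        for write_set in combinations(nodes, w)
        for read_set in combinations(nodes, r)
    )
```

For example, `tolerated_failures(3, 2, 2)` is 1 and `tolerated_failures(5, 3, 3)` is 2, matching the bullets above, while `tolerated_failures(3, 3, 1)` is 0 because a single failed node blocks all writes.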

Limitations of Quorum Consistency

- If you have `n` replicas, and you choose `w` and `r` such that `w + r > n`, you can generally expect every read to return the most recent value written for a key. This is the case because the set of nodes to which you’ve written and the set of nodes from which you’ve read must overlap.

- Even with `w + r > n`, depending on the implementation, there are edge cases where stale values can be returned. It's best to think of the `r` and `w` values as adjusting the probability that a stale value will be read.

Monitoring staleness

- In systems with leaderless replication, there is no fixed order in which writes are applied, which makes monitoring more difficult than in leader-based replication, where the replication lag can be measured as the difference between a follower's local data and the leader's.

- Eventual consistency is a deliberately vague guarantee, but for operability it’s important to be able to quantify “eventual.”

Sloppy Quorums and Hinted Handoff

- Database designers face a trade-off:

- Is it better to return errors to all requests for which we cannot reach a quorum of `w` or `r` nodes?

- Or should we accept writes anyway, and write them to some nodes that are reachable but aren’t among the `n` nodes on which the value usually lives?

- The latter is known as a sloppy quorum: writes and reads still require `w` and `r` successful responses, but those may include nodes that are not among the designated `n` “home” nodes for a value.

- Once the network interruption is fixed, any writes that one node temporarily accepted on behalf of another node are sent to the appropriate “home” nodes. This is called hinted handoff.

Sloppy quorums are particularly useful for increasing write availability: as long as any `w` nodes are available, the database can accept writes. However, this means that even when `w + r > n`, you cannot be sure to read the latest value for a key, because the latest value may have been temporarily written to some nodes outside of `n`.

Multi-datacenter operation

In a multi-datacenter environment, leaderless replication uses an approach similar to multi-leader replication: writes across datacenters are usually asynchronous, while writes within a datacenter can be synchronous. This dampens the effect of faults in the cross-datacenter link.

The “happens-before” relationship and concurrency

An operation `A` happens before another operation `B` if `B` knows about `A`, or depends on `A`, or builds upon `A` in some way. [...] Two operations are concurrent if neither happens before the other (i.e. neither knows about the other).

Chapter 10 — Batch Processing

Three types of systems:

1. Services (online systems): Handle client requests as they arrive. Primary performance metric is response time.

2. Batch processing systems (offline systems): Periodically take a large amount of input data, run a job to process it, and produce some output data. Primary performance metric is throughput.

3. Stream processing systems (near-real-time systems): Somewhere between (1) and (2). Like (2), a stream processor consumes inputs and produces outputs (rather than responding to requests). However, a stream job operates on events shortly after they happen.

Batch Processing with Unix Tools

216.58.210.78 - - [27/Feb/2015:17:55:11 +0000] "GET /css/typography.css HTTP/1.1" 200 3377 "http://martin.kleppmann.com/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.115 Safari/537.36"

For a file containing log lines like the one above, simple Unix tools can be used to do log analysis.

For example, to find the five most popular pages:

cat /var/log/nginx/access.log | awk '{print $7}' | sort | uniq -c | sort -r -n | head -n 5

Writing an equivalent simple program with a hash table is possible. The Unix approach has some advantages: sort, for example, uses multiple threads, and if the data doesn't fit in memory, it is written to disk.
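For comparison, the hash-table version mentioned above might look roughly like this. It is a sketch, not the book's code: `top_pages` is a made-up name, and all sample lines except the first are invented for illustration. Like `awk '{print $7}'`, it takes the seventh whitespace-separated field as the URL.

```python
from collections import Counter


def top_pages(log_lines, n=5):
    """Count requests per URL (7th whitespace-separated field), return the n most common."""
    counts = Counter()
    for line in log_lines:
        fields = line.split()
        if len(fields) >= 7:       # skip malformed lines
            counts[fields[6]] += 1
    return counts.most_common(n)


# Made-up sample data in the same format as the log line above.
sample = [
    '216.58.210.78 - - [27/Feb/2015:17:55:11 +0000] "GET /css/typography.css HTTP/1.1" 200 3377',
    '216.58.210.78 - - [27/Feb/2015:17:55:12 +0000] "GET /index.html HTTP/1.1" 200 512',
    '216.58.210.78 - - [27/Feb/2015:17:55:13 +0000] "GET /css/typography.css HTTP/1.1" 200 3377',
]
top = top_pages(sample)
```

The whole counter lives in memory, so this works well while the number of distinct URLs is small. The sort-based pipeline does not have that constraint, since `sort` can spill to disk.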

The Unix Philosophy

- Make each program do one thing well. To do a new job, build afresh rather than complicate old programs by adding new “features”.

- Expect the output of every program to become the input to another, as yet unknown, program. Don’t clutter output with extraneous information. Avoid stringently columnar or binary input formats. Don’t insist on interactive input.

- Design and build software, even operating systems, to be tried early, ideally within weeks. Don’t hesitate to throw away the clumsy parts and rebuild them.

- Use tools in preference to unskilled help to lighten a programming task, even if you have to detour to build the tools and expect to throw some of them out after you’ve finished using them.

Uniform Interface

All programs must use the same I/O interface to be able to connect any program’s output to any program’s input. In Unix, that interface is a file (or, more precisely, a file descriptor). A file is just an ordered sequence of bytes.

Unix programs read from stdin (default: keyboard) and write to stdout (default: screen). Because of this loose coupling, it is possible to pipe one program's stdout into another program's stdin, or to have stdin/stdout be an arbitrary file.

Running on a single machine is the biggest limitation of Unix tools.

MapReduce & Distributed Filesystems

Like Unix tools, MapReduce normally doesn't have side-effects -- It takes in input, and produces an output.

MapReduce jobs read and write files on a distributed filesystem -- HDFS (Hadoop Distributed Filesystem) in Hadoop's implementation of MapReduce.

HDFS is based on the shared-nothing principle.

HDFS consists of a daemon process running on each machine, exposing a network service that allows other nodes to access files stored on that machine.

A central server called the NameNode keeps track of which file blocks are stored on which machine.

Conceptually, it is one big filesystem that can use the space on the disks of all machines running the daemon.

Replication is used to tolerate machine and disk failures. Replication may mean simply several copies of the same data on multiple machines, or an erasure coding scheme such as Reed–Solomon codes, which allows lost data to be recovered with lower storage overhead than full replication.

MapReduce Job Execution

MapReduce is a programming framework with which you can write code to process large datasets in a distributed filesystem like HDFS.

The pattern of MapReduce is similar to the example above in Simple Log Analysis:

1. Read a set of input files, and break them up into records. In the web server log example, each record is one line in the log (that is, `\n` is the record separator).

2. Call the mapper function to extract a key and value from each input record. In the preceding example, the mapper function is `awk '{print $7}'`: it extracts the URL (`$7`) as the key, and leaves the value empty.

3. Sort all of the key-value pairs by key. In the log example, this is done by the first `sort` command.

4. Call the reducer function to iterate over the sorted key-value pairs. If there are multiple occurrences of the same key, the sorting has made them adjacent in the list, so it is easy to combine those values without having to keep a lot of state in memory. In the preceding example, the reducer is implemented by the command `uniq -c`, which counts the number of adjacent records with the same key.

Those four steps can be performed by one MapReduce job. Steps 2 (map) and 4 (reduce) are where you write your custom data processing code. Step 1 (breaking files into records) is handled by the input format parser. Step 3, the sort step, is implicit in MapReduce—you don’t have to write it, because the output from the mapper is always sorted before it is given to the reducer.

To create a MapReduce job, you implement two callback functions: the mapper and the reducer.

Mapper: The mapper is called once for every input record, and its job is to extract the key and value from the input record. For each input, it may generate any number of key-value pairs (including none). It does not keep any state from one input record to the next, so each record is handled independently.

Reducer: The MapReduce framework takes the key-value pairs produced by the mappers, collects all the values belonging to the same key, and calls the reducer with an iterator over that collection of values. The reducer can produce output records.
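The mapper and reducer contract can be illustrated with a tiny single-process simulation of the web server log example. This is only a sketch of the programming model, not Hadoop's API: `run_job` is a hypothetical stand-in for the framework, and the sample records are invented.

```python
from itertools import groupby
from operator import itemgetter


def mapper(record: str):
    """Called once per input record; emits zero or more (key, value) pairs."""
    fields = record.split()
    if len(fields) >= 7:
        yield fields[6], ""  # key = URL, value left empty


def reducer(key: str, values):
    """Called once per key with an iterator over all values for that key."""
    yield key, sum(1 for _ in values)


def run_job(records, mapper, reducer):
    """Hypothetical stand-in for the framework: map, sort by key, then reduce."""
    pairs = [pair for record in records for pair in mapper(record)]
    pairs.sort(key=itemgetter(0))  # the implicit sort step
    output = []
    for key, group in groupby(pairs, key=itemgetter(0)):
        output.extend(reducer(key, (value for _, value in group)))
    return output


# Made-up sample records in the access log format.
records = [
    '1.2.3.4 - - [27/Feb/2015:17:55:11 +0000] "GET /index.html HTTP/1.1" 200 512',
    '1.2.3.4 - - [27/Feb/2015:17:55:12 +0000] "GET /css/typography.css HTTP/1.1" 200 3377',
    '1.2.3.4 - - [27/Feb/2015:17:55:13 +0000] "GET /index.html HTTP/1.1" 200 512',
]
counts = run_job(records, mapper, reducer)
```

Because the pairs are sorted before grouping, the reducer sees all values for one key together and only needs to keep a running count, mirroring what `uniq -c` does after `sort`.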